When I saw a post (20+ Common VSAN Questions) by Chuck Hollis on VMware’s corporate blog claiming (extract below) a “stunning performance advantage (over Nutanix) on identical hardware with most demanding datacenter workloads”, I honestly wondered where he gets this nonsense.

Then, when I saw the Microsoft Applications on Virtual SAN 6.0 white paper released, I thought I would check what VMware is claiming in terms of this stunning performance advantage for an application I have done lots of work with lately: MS Exchange.

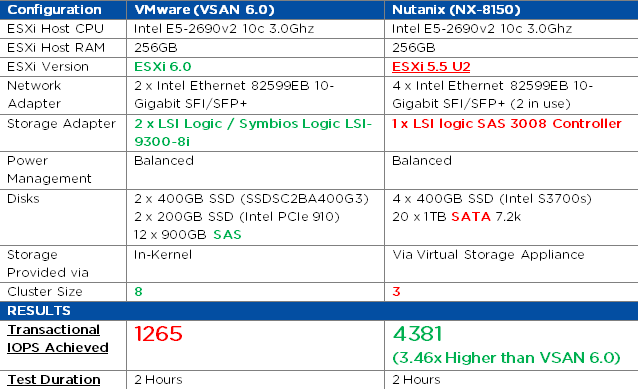

I have summarized the VMware white paper and the Nutanix testing I personally performed in the table below. These tests were not exactly the same; however, the ESXi host CPU and RAM were identical, and both tests used 2 x 10Gb as well as 4 x SSD devices.

The main differences were ESXi 6.0 for the VSAN testing and ESXi 5.5 U2 for Nutanix; I’d say that’s advantage number 1 for VMware. Advantage number 2 is that VMware used two LSI controllers where my testing used one, and VMware had a cluster size of 8 whereas my testing (in this case) only used 3. The larger cluster size is a huge advantage for a distributed platform, especially VSAN since it does not have data locality: the more nodes in the cluster, the less chance of a bottleneck.

Nutanix has one advantage, more spindles, but the advantage really goes away when you consider they are SATA compared to VSAN using SAS. But if you really want to kick up a stink about Nutanix having more HDDs, take 100 IOPS per drive (which is much more than you can get from a SATA drive consistently) off the Nutanix Jetstress result.

I have highlighted in red the areas where I feel one vendor is at a disadvantage, and in green the opposing solution. Regardless of these opinions, the results really do speak for themselves.

So here is a summary of the testing performed by each vendor and the results:

The VMware white paper did not show the Jetstress report, however for transparency I have copied the Nutanix Test Summary below.

Summary: Nutanix has a stunning performance advantage over VSAN 6.0 even on identical or lesser hardware, with an older version of ESXi and lower spec HDDs, while (apparently) having a significant disadvantage by not running in the kernel.

Thanks for enabling comments on your blog, Josh.

First suggestion — please go re-read the VMware white paper you’ve referenced.

You may notice a few things: (1) we’re showing the results of multiple, concurrent workloads — not just one (2) we were very clear that we hadn’t worked to optimize performance.

Pulling out one metric and doing an apples-oranges performance comparison isn’t exactly what knowledgeable customers would like to see, no?

Second suggestion — show your work. Precise configuration, testing methodology, tools used, firmware/drivers, exact components, size of mailboxes, size of datastores, number of workers, etc.

Otherwise, it looks like the worst form of benchmarketing.

— Chuck

Aaand, we have a fight!

Josh, Chuck, thank you both for the details. Still, I would like to point out some obvious proverbs we have here in Romania that can be translated to English as follows:

1). Stupid is the one paying, not the one selling

2). Two fight, and the 3rd wins

Keep up the good work. We (customers) love it when we see fights between competing vendors. Really we do!

I agree the fight Chuck continues to wage against Nutanix is not benefiting VMware and is taking the focus off displacing SAN/NAS in the market. I think his energy would be better spent attacking legacy storage.

I think customers are smarter than most vendors give them credit for, which is why Nutanix has 52% of the hyperconverged market.

Thanks for the comment Victor.

Hi Josh, I can’t speak for everyone else, but I certainly don’t appreciate your misdirection. I was hoping for a more direct response to (or retraction of) your claims.

You made a direct competitive performance claim with two serious flaws.

The biggest flaw is that you pulled one metric out of a mixed workload running multiple applications, and then compared it against a single application running in your home lab environment.

100 out of 100 people would agree that this is a useless — even unprofessional — comparison.

Thanks

— Chuck

Hi Chuck,

You’re complaining about what you call a competitive performance claim, where I provided, at worst, some data while quoting VMware’s information.

You claimed a stunning performance advantage for VSAN over Nutanix yet provided absolutely nothing to back it up.

Your claim that 100 out of 100 people would agree is, like the original statement on your blog, without basis.

Thanks for the continued attention you bring to Nutanix and my blog, my employer and blog sponsors appreciate the traffic.

Josh

I don’t think it’s useless, Chuck. Josh has some very valid points here. You have some valid points too. The problem is twofold.

1) it no longer matters who is “right” or “wrong”. This whole thing between VMware and Nutanix has degenerated into a public bitchslap of absurd proportions. Nobody except the koolaid drinkers on either side *cares* because both sides have absolutely lost credibility in being able to present a valid analysis of anything. It’s self-serving marketing pap and Oranges being compared to Spacefaring Spider Monkeys.

2) Oranges are being compared to Spacefaring Spider Monkeys by both sides!

If you want to prove something, then work with someone independent. Howard, Stuart, myself…there are several options that have the technical chops to perform third party testing and who are pretty publicly not drinking koolaid from either of the vendors in this bun fight.

Let’s run workloads up on the same hardware. Bounce things back and forth so that teams can optimize for performance. Then let’s run some real world workloads that neither team gets to optimize for, where it is just as a real coalface administrator would see if they were simply putting real production workloads on and having them run.

If you want, I’ll do it. I will probably need some help with hardware and licensing, but I absolutely will test the software on the exact same hardware, and I’ll gladly work closely with teams on both sides throughout the process. I will even put these things into real world production environments and test the same workloads on both setups.

Josh, Chuck…both sides of this bunfight are constantly bickering and dickering and not comparing like for like.

I don’t know about you guys, but I’m tired of the constant hemming and hawing and the back and forth showing up in my feeds. Putting aside any other roles I play, hats I wear and so forth…as a systems administrator, someone who reads technology magazines as part of my job and who has to try to decide upon the best technologies for my clients, I’m sick of this.

Let’s put this to bed. Let’s get the performance side of this settled so we can move on to FAR more important questions, like how much performance differences actually matter, ease of use discussions, quality of support and price/performance/watt/sq ft.

Anyways, I’ve vented my rant. You know how to find me.

Hi Josh: What RAID controller did you use in your Nutanix ESXi 5.5 host? VMware used an LSI 9300-8i but yours is unspecified. Would be interested in knowing the make/model along with any add on features used (such as LSILogic’s CacheCade SSD caching software), RAID level used, controller settings, etc.

Hi Larry,

I have updated the table now. It is a single LSI Logic SAS 3008 controller, which is the standard for the Nutanix NX-8150 node. We don’t use the storage controller HW features you mentioned; we do everything in software.

Cheers

Josh

Hi Josh — would you and your readers like to see some interesting head-to-head data? Let me know — thanks!

Hi Chuck,

It’s fair to say you and I are unlikely to agree on anything, so let’s accept this, move on and keep things civil.

I think at this point, no matter the quality of the head-to-head data provided (from VMware or Nutanix), it will be perceived as having low credibility.

I can make ANY storage solution look good or bad, as I’m sure some of the guys at VMware can as well.

So I invite you to focus on delivering customer value and displacing SANs, as this is what I am focusing on.

There is no value in fighting over IOPS, especially 4k ones.

I wrote the below post a while back where I quoted two great guys (Vaughn and Chad) where they rightly point out

(http://www.joshodgers.com/2015/04/17/peak-performance-vs-real-world-performance/)

So if Nutanix or VSAN have higher performance in a benchmark, does it really matter? The answer is no.

The only thing that matters is the customer gets the business outcome they desire.

I understand your team may be focusing on multiple competitive benchmarks against Nutanix. It truly is a compliment to be given this level of attention, but as I said above, I think any test will be perceived as having low credibility no matter what detail is provided.

Let’s be honest: if VMware’s testing showed Nutanix performance was better than VSAN, VMware would not publish it, so the only tests that would be published will be in VMware’s favour. The same is true for any competing companies.

So where to from here? I propose a truce: let’s focus on talking about the value of our own products and not throwing mud at each other. But it’s your call.

Cheers

Thanks Josh. Always interested in how much storage I/O overhead is dumped onto the controller and how much is handled by the host. In today’s world of fast, high core count pCPUs, it seems to make sense to let the host do the work. Will have to look more carefully at Nutanix’ community edition.

FYI – keep your blog going. I’ve been a lurker for years and have found many of your posts very helpful.

Hi Josh, this is not about throwing mud, etc. It’s about helping customers make informed choices with the best information available.

Transparency, remember?

Relative levels of performance (and stability under heavy loads) are a serious concern for many consumers of IT. Products that deliver sub-standard performance and then require more hardware (and perhaps licenses) to support a given workload cost more money. Sometimes a lot more money.

And everyone cares about money, right?

If you’d like to take a step in the right direction, how about going back and correcting the post above to reflect your intent?

— Chuck

Hi Chuck,

The Jetstress performance result I posted about Nutanix is 100% legitimate. I personally did the testing, and it was on 100% GA code (NOS 4.1.2) and standard NX-8150 nodes.

I can tune NOS and get higher performance, but I prefer to leave things at default and publish those results, as our goal is simplicity.

That being said, if you remove your comment about VSAN having a stunning performance advantage over Nutanix, send me a tweet when it’s removed and I’ll remove this post.

This post was in response to your claim. If you had never made the claim, or if you go ahead and remove it, there is no reason for my reply.

If you would prefer to run the same configuration in a 3 node cluster with no mixed workloads as I did in my test (which was done well before I saw your result, not in response to it), please feel free to post your result and I’ll update my post with your revised number.

Or give me permission to post like-for-like tests. You can give me the exact configuration you want VSAN to be set up in (and even validate it before and after I have run the tests via WebEx); I’ll supply the HW, do like-for-like back-to-back testing and publish the results (regardless of which solution performs better).

I think all of the above options are reasonable, but I’ll extend you the courtesy to choose the one you prefer.

Cheers

Josh

Hey Chuck, please stop being a hypocrite. Any time a customer posts a case study on how Nutanix displaced EMC (or VMware), your hounds get on them to rescind it based on the MSA they have to sign. Let the customers speak. We know you need to try and stay relevant, but until your step daddy EMC allows for public customer comments, you are simply just looking for attention.

Sincerely,

Every Rational IT person in the industry…

Josh, thanks for keeping it professional. I think the responses to you are a very unprofessional representation of VMware. I’m pretty disappointed honestly.

I like competition

Hi Josh.

This test was run on 1 VM on the cluster? So the other nodes were helping IO wise as the data is spread across all disks in the cluster? How many databases and their size, threads used, db copies did you have it configured for? I’m trying to understand the 4000 IO number in contrast to your best practice document where it achieved 2000 using a 4 node cluster?

It looks like a huge improvement with the same hardware, was it NOS perf improvements?

thanks,

Pete

Hi Pete, in this case, the testing was done prior to VMW releasing their results, and it was a 3 node NX8150 cluster running 2 Jetstress VMs concurrently. Threads were 73 based on Jetstress Autotune. The number of databases doesn’t increase performance on Nutanix from a Jetstress perspective, so 1 VMDK with a large DB or many DBs makes no difference; performance is dictated by the working set size. The best practice document (which I authored) is getting dated and should be updated with newer performance details. We are considering releasing a white paper specifically on Jetstress performance which will cover everything in detail, so stay tuned for that. In the meantime, it’s safe to say the conclusion of the white paper will be that Nutanix delivers more IOPS than is required for any real world Exchange solution on a per node basis. The constraint is really capacity, which is not much of a constraint, especially considering our compression, erasure coding and storage only nodes, all of which make cost per mailbox very attractive.

Thanks for the comment.

So this was with a single database per VM? What was the total working set on the cluster? The screenshot of 4300 IOPS was from a single Jetstress report. Did each node achieve 4300 IOPS, or was that the total for the cluster? Since you said you ran 2 VMs, does that mean your cluster IOPS were 2*4300, or does the cluster provide ~4300 IOPS regardless of the number of Jetstress processes/VMs/nodes with active VMs?

I’m trying to understand why you test with n-1 (you had 3 out of 4 nodes in the whitepaper for 2000 iops), wouldn’t you get more iops if you just had a VM on each node?

Pretty impressive numbers compared to my NetApp.

Josh, I was looking for some VSAN 6 performance numbers and your post came up in my Google search. Always enjoy reading fellow VCDX posts! A few quick ones: where did you get the VMware disk details from? Intel doesn’t manufacture an SSD 910 Series in 200GB capacity. I also noticed that the Power Management was set to Balanced. I have witnessed a 30% decrease in SSD performance on VMware ESXi hosts when Power Management isn’t set to Performance-High. Your results may be better for both vendors with that change. Lastly, 1265 IOPS for the VMware tests seems awful. Awful like the way a car drives when someone forgets that they left the emergency brake on, don’t you agree? Throwing out the SSDs, you would think that (12) 10K SAS drives would give you 1560 IOPS (130 IOPS x 12). It’s so bad I’d almost look at the controller firmware, driver, or queue depth configuration. For this controller the queue depth should be set to AQLEN=600; do you know if that was done?
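The spindle arithmetic in this comment is easy to reproduce. Below is a minimal sketch using only the figures quoted above (12 drives, 130 IOPS per drive, 1265 reported IOPS). The function name is made up for illustration, and the model naively assumes every drive is kept busy, which the reply that follows disputes for Jetstress.

```python
def spindle_estimate(drive_count: int, iops_per_drive: int) -> int:
    """Naive aggregate IOPS: every drive contributes its full per-drive figure."""
    return drive_count * iops_per_drive


# Figures quoted in the comment above.
hdd_only_iops = spindle_estimate(drive_count=12, iops_per_drive=130)
reported_jetstress_iops = 1265

gap = hdd_only_iops - reported_jetstress_iops
ratio = reported_jetstress_iops / hdd_only_iops

print(f"HDD-only estimate: {hdd_only_iops} IOPS")
print(f"Reported result:   {reported_jetstress_iops} IOPS "
      f"({ratio:.0%} of the estimate, {gap} IOPS short)")
```

An estimate like this is only a ceiling on what the drives could deliver if the benchmark issued enough concurrent I/O to saturate them.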

All the information regarding VSAN came from VMware, which I simply accepted as being accurate. The 1265 (Jetstress) IOPS is actually a pretty good result. Jetstress, as you probably know, has very low outstanding I/O, so 1265 Jetstress IOPS is more like 30k+ IOPS when using a tool like SQLIO. As such, the number of drives is really irrelevant, as Jetstress is not driving the drives anywhere near as hard as it could if it had, say, 32 OIO. Thanks for the comment.
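The point about outstanding I/O can be illustrated with Little's law, which says throughput is roughly concurrency divided by latency. This is a rough sketch only: the 1 ms latency is a hypothetical placeholder, not a figure from either test, and on real storage latency usually rises as queue depth grows, so the scaling is an idealization rather than a validation of the 30k+ figure.

```python
def iops_at(outstanding_io: int, latency_ms: float) -> float:
    """Little's law: throughput (IOPS) = concurrency / latency (in seconds)."""
    return outstanding_io * 1000.0 / latency_ms


# Hypothetical per-I/O latency, held constant purely for illustration.
ASSUMED_LATENCY_MS = 1.0

for oio in (1, 4, 32):
    print(f"{oio:>2} outstanding I/O -> {iops_at(oio, ASSUMED_LATENCY_MS):,.0f} IOPS")
```

Under these assumptions a tool running 32 OIO reports 32 times the IOPS of one running a single outstanding I/O on the same storage, which is why IOPS figures from different tools are hard to compare directly.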