A Raw Device Mapping or “RDM” allows a VM to access a volume (or LUN) on the physical storage via either Fibre Channel or iSCSI.

When discussing Raw Device Mappings, it is important to highlight that there are two RDM modes: Virtual Compatibility Mode and Physical Compatibility Mode.

See the following article for a detailed breakdown: Difference between Physical compatibility RDMs and Virtual compatibility RDMs (2009226).

So how does an RDM compare to a VMDK on a Datastore?

VMware released a white paper called Performance Characterization of VMFS and RDM Using a SAN in 2008, which debunked the myth that RDMs gave significantly higher performance than VMDKs on datastores.

So RDMs have NO performance advantage over VMDKs on a Datastore.

With that in mind, what advantages (if any) do RDMs have today?

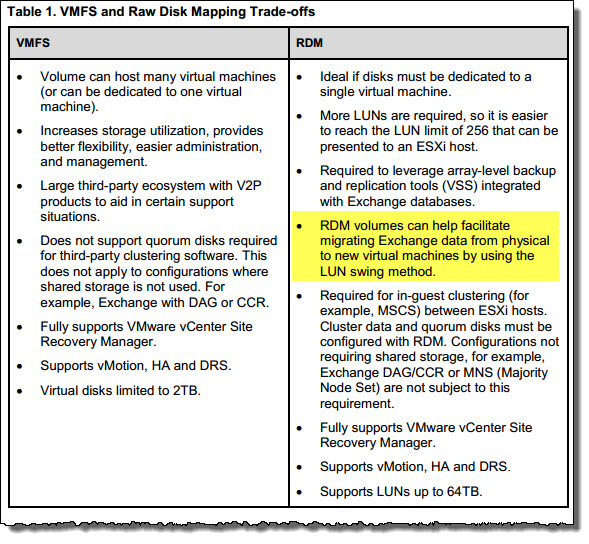

VMware released their Microsoft Exchange 2010 on VMware Best Practices Guide, which includes a table on page 14 (pictured) showing the trade-offs between VMFS and RDMs.

I have highlighted one advantage that RDMs still have over VMDK-on-datastore deployments: the ability to migrate from a physical Exchange server using centralized SAN storage to a VM without data migration.

However, I find the most common way to migrate from a physical deployment to a virtual deployment is by performing mailbox migrations to virtualized Exchange servers in an ESXi environment. This avoids the complexities of RDMs and ensures no capacity on the shared storage is wasted (i.e., siloed).

The table also lists one other advantage for RDMs: support for drives of up to 64TB, whereas virtual disks on VMFS were limited to 2TB. That limit has since been raised to 62TB in vSphere 5.5.

Recommendation: Do not use RDMs for MS Exchange deployments.

As with Local Storage discussed in Part 7, RDM deployments have more downsides (mainly around inefficiency and complexity) than upsides and I would recommend considering other storage options for Virtualized Exchange deployments.

Other options along with my recommended options will be discussed in the next 2 parts of this series and in upcoming posts on Storage performance and resiliency.

Back to the Index of How to successfully Virtualize MS Exchange.

What about backups? With RDMs you can use storage-based tools; what do you recommend for VMFS? And happy new year.

I will be doing a separate post focusing on backups, as it's too broad a topic to include in this post. Stay tuned.

Happy new year to you too!

Hi Josh,

I have a question about RDMs on Exchange servers. How do you manage an auto-tiering SAN with one Exchange datastore?

I have many 2 TB Exchange servers (6-8 in a cluster) with multiple VMDKs inside, and only a couple of virtual disks generate heavy I/O on the SAN. So if I follow your recommendations, I should not use RDMs. But that means that all my Exchange LUNs will jump to faster SAN disks, instead of only the ones with heavy I/O.

I’m not yet on Exchange 2013, still on Exchange 2007, but we’re planning to migrate to 2013.

Do your recommendations still apply in this case, or can we consider using RDMs?

Thanks for your answer, and your blog is a really great bible of good advice!

Julien

Hi Justin,

You’re in luck with your I/O problem: moving to 2013 will reduce your I/O dramatically, as I/O is reduced by about 50% from 2007 to 2010, and by another 50% between 2010 and 2013.
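
To make the compounding explicit, here is a minimal Python sketch of the arithmetic. The function name `remaining_io_fraction` is hypothetical, and the 50% figures are the approximate reductions quoted above, not measurements from any particular environment.

```python
def remaining_io_fraction(reductions):
    """Return the fraction of the original I/O left after successive reductions.

    Each entry in `reductions` is a fractional reduction between 0 and 1,
    applied to whatever I/O remains after the previous step.
    """
    remaining = 1.0
    for reduction in reductions:
        if not 0.0 <= reduction <= 1.0:
            raise ValueError("each reduction must be between 0 and 1")
        remaining *= 1.0 - reduction
    return remaining


# Approximate figures from the reply: ~50% (2007 -> 2010), then ~50% (2010 -> 2013).
fraction_left = remaining_io_fraction([0.5, 0.5])
print(f"I/O remaining relative to Exchange 2007: {fraction_left:.0%}")
```

Because the second reduction applies to the already-halved figure, the two steps leave roughly a quarter of the original Exchange 2007 I/O rather than eliminating it.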

That being said, I’m sure you’re aware 2013 is very CPU/RAM intensive compared to 2007/2010.

If you can tell me what kind of storage you’re using, I can give you more specific advice.

In most cases, VMDKs on multiple datastores is my recommendation, but give me some details and I’ll happily assist.

Thanks for your reply.

We have an HP 3PAR SAN with 3 disk classes (NL-FC-SSD) and an Exchange cluster virtualized with VMware 5.1. Some disks generate very heavy I/O.

We have 1 Exchange server per LUN with all disks as VMDKs, so due to the high I/O, the full LUN is moving from FC to SSD disks. That means about 2 TB of space moves to the upper disk class.

We’re planning to move to Exchange 2013, and don’t know if we should continue like this (1 Exchange server, 1 LUN containing all disks) or use smaller LUNs and map the high-I/O disks as RDMs.

Based on your reply, if I/O is reduced, I think we can follow your recommendations: assigning more CPU and RAM.

But is separating VMDKs across multiple datastores really recommended?

Julien not Justin

Sorry Julien

The issue with all your VMDKs being on one datastore (LUN) is that your performance is likely being constrained artificially by the limited queue depth available, with all VMDKs competing for it. This generally leads to lower performance and higher latency.

By spreading Exchange database/log VMDKs across multiple datastores, you increase the available queue depth with every datastore you add.
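
As a rough illustration of why this helps, the sketch below compares the queue slots available per VMDK with one datastore versus several. It assumes each datastore sits on its own LUN, that VMDKs are spread evenly, and a per-LUN queue depth of 32, which is only an illustrative value (the real figure depends on the HBA, driver and array). The function name is hypothetical.

```python
def queue_slots_per_vmdk(vmdk_count, datastore_count, per_lun_queue_depth=32):
    """Estimate the average queue slots available to each VMDK.

    Simplified model: every datastore is backed by its own LUN with the
    same queue depth, and VMDKs are spread evenly across the datastores.
    The default of 32 is an assumed, illustrative queue depth.
    """
    if vmdk_count <= 0 or datastore_count <= 0 or per_lun_queue_depth <= 0:
        raise ValueError("all inputs must be positive")
    total_queue_depth = datastore_count * per_lun_queue_depth
    return total_queue_depth / vmdk_count


# Eight Exchange VMDKs sharing one datastore versus spread over four.
single = queue_slots_per_vmdk(vmdk_count=8, datastore_count=1)
spread = queue_slots_per_vmdk(vmdk_count=8, datastore_count=4)
print(f"1 datastore:  {single:.0f} queue slots per VMDK")
print(f"4 datastores: {spread:.0f} queue slots per VMDK")
```

This ignores other limits in the I/O path (adapter queues, array ports, the disks themselves), so treat it as a way to reason about contention rather than a performance prediction.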

Unfortunately I am not an expert with 3PAR storage, or its tiering, but without a doubt, using multiple datastores will not hurt.

I would recommend you proceed with your Exchange 2013 planning as a matter of priority as Exchange 2013 plus spreading the VMDKs across multiple datastores will definitely be a big improvement.

I am releasing several posts in the coming week around VM storage design and VMDK provisioning so stay tuned for those as they have some handy tips as well.

Thanks for your reply.

Indeed, we’ll push to migrate to Exchange 2013 as fast as possible.

I’ll also push to apply your design/optimisation recommendations.

I’ll be waiting to read your new posts on Exchange

Julien