In response to the recent community post “Support for Exchange Databases running within VMDKs on NFS datastores”, the co-authors and I have received a lot of feedback, the vast majority of which has been constructive and positive.

The feedback that has not been constructive or positive appears to me to stem from the issue not being properly understood, for whatever reason.

So, in an attempt to clear up the issue, I will show exactly what the community post is talking about with regard to running Exchange in a VMDK on an NFS datastore.

1. Neither Exchange nor the Guest OS is exposed in any way to the NFS protocol

Let's make this very clear: Windows and Exchange have NOTHING to do with NFS.

The configuration we are proposing be supported is as follows:

1. A vSphere Virtual Machine with a Virtual SCSI Controller

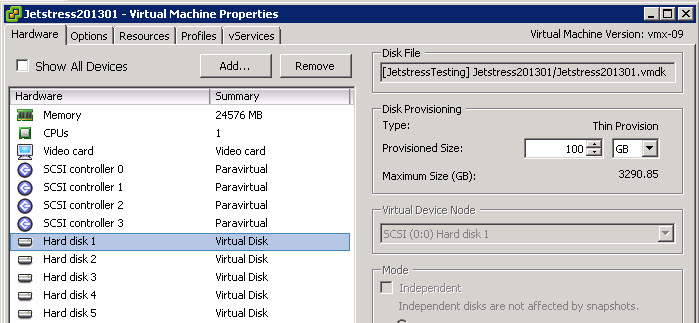

In this screenshot from my test lab, the highlighted SCSI Controller 0 is one of 4 virtual SCSI controllers assigned to this Virtual Machine. While there are other types of virtual controllers which should also be supported, Paravirtual is, in my opinion, the most suitable for an application such as Exchange due to its high performance and low latency.
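For readers who want to reproduce a similar layout, the following Python sketch generates the kind of `.vmx` entries that correspond to four PVSCSI controllers with disks spread round-robin across them. It is a sketch under stated assumptions: it assumes the 4-controller and 15-disks-per-controller limits of the vSphere 5.x era (SCSI unit 7 is reserved for the controller itself), the VMDK file names are hypothetical, and in practice you would add controllers and disks through the vSphere Client or PowerCLI rather than by hand-editing the `.vmx` file.

```python
def pvscsi_vmx_lines(vmdk_files: list[str], controllers: int = 4) -> list[str]:
    """Sketch of .vmx entries spreading VMDKs round-robin across PVSCSI controllers.

    Assumes vSphere 5.x-era limits: at most 4 virtual SCSI controllers per VM and
    units 0-15 per controller, with unit 7 reserved for the controller itself.
    The first file in the list (e.g. the OS disk) lands on scsi0:0.
    """
    if not 1 <= controllers <= 4:
        raise ValueError("a VM supports 1 to 4 virtual SCSI controllers")
    usable_units = [u for u in range(16) if u != 7]  # units 0-6 and 8-15
    lines = []
    for bus in range(controllers):
        lines.append(f'scsi{bus}.present = "TRUE"')
        lines.append(f'scsi{bus}.virtualDev = "pvscsi"')  # Paravirtual controller
    next_unit = [0] * controllers  # index into usable_units, per controller
    for i, vmdk in enumerate(vmdk_files):
        bus = i % controllers  # round-robin across controllers
        if next_unit[bus] >= len(usable_units):
            raise ValueError(f"controller scsi{bus} has no free units left")
        unit = usable_units[next_unit[bus]]
        next_unit[bus] += 1
        lines.append(f'scsi{bus}:{unit}.present = "TRUE"')
        lines.append(f'scsi{bus}:{unit}.fileName = "{vmdk}"')
    return lines


if __name__ == "__main__":
    # Hypothetical file names: one OS disk plus eight data disks.
    disks = ["Exch01.vmdk"] + [f"Exch01_{n}.vmdk" for n in range(1, 9)]
    print("\n".join(pvscsi_vmx_lines(disks)))
```

Spreading disks across controllers this way gives each controller its own queue, which is the usual reason for configuring more than one virtual SCSI controller for an IO-heavy application such as Exchange.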

2. A Virtual SCSI disk is presented to the vSphere Virtual Machine via a Virtual SCSI Controller

The screenshot below shows a Virtual Disk (or VMDK) presented to the Virtual Machine. This is a SCSI device (i.e., block storage, which is what Exchange requires).

Note: The screenshot shows the Virtual Disk as “Thin Provisioned”, but it could also be “Thick Provisioned”, although thick provisioning offers minimal to no performance benefit with modern storage solutions.

So, now that we have covered what the underlying Virtual Machine looks like, let's see what this presents to a Windows 2008 guest OS.

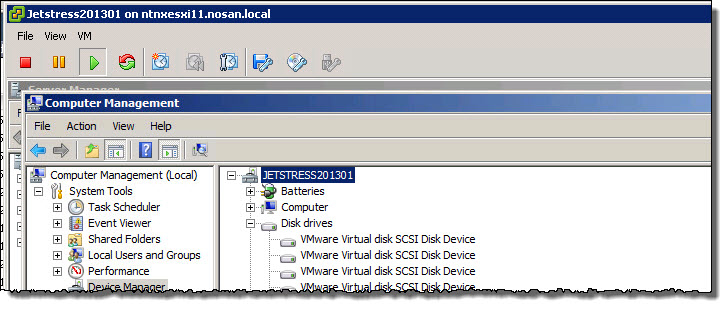

In Computer Management, under Device Manager, we can see the expanded “Storage Controllers” section showing 4 “VMware PVSCSI Controllers”.


Next, still in Computer Management under Device Manager, we can see the expanded “Disk Drives” section showing a number of “VMware Virtual disk SCSI Disk Devices”, each of which represents a VMDK.

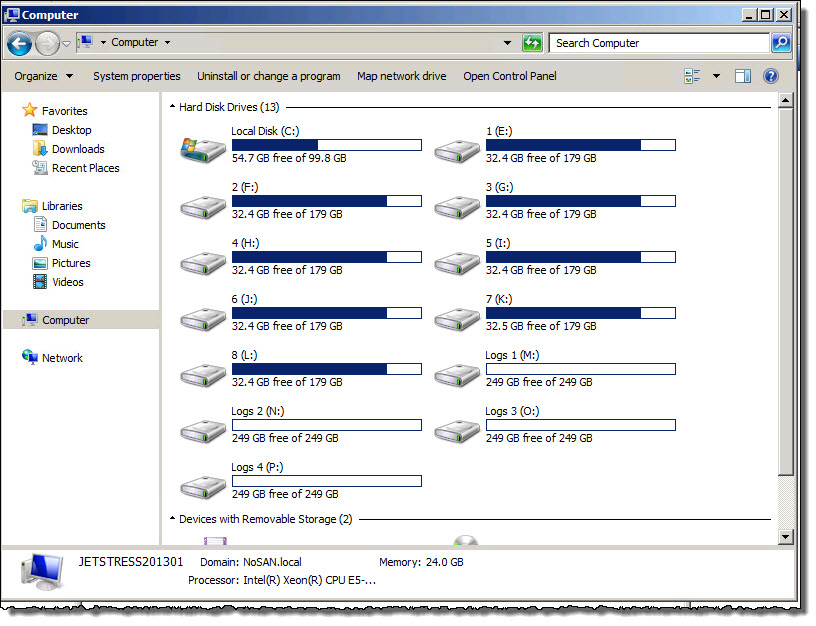

Next, we open “My Computer” to see how the VMDKs appear.

As you can see below, the VMDKs appear as normal drive letters to Windows.

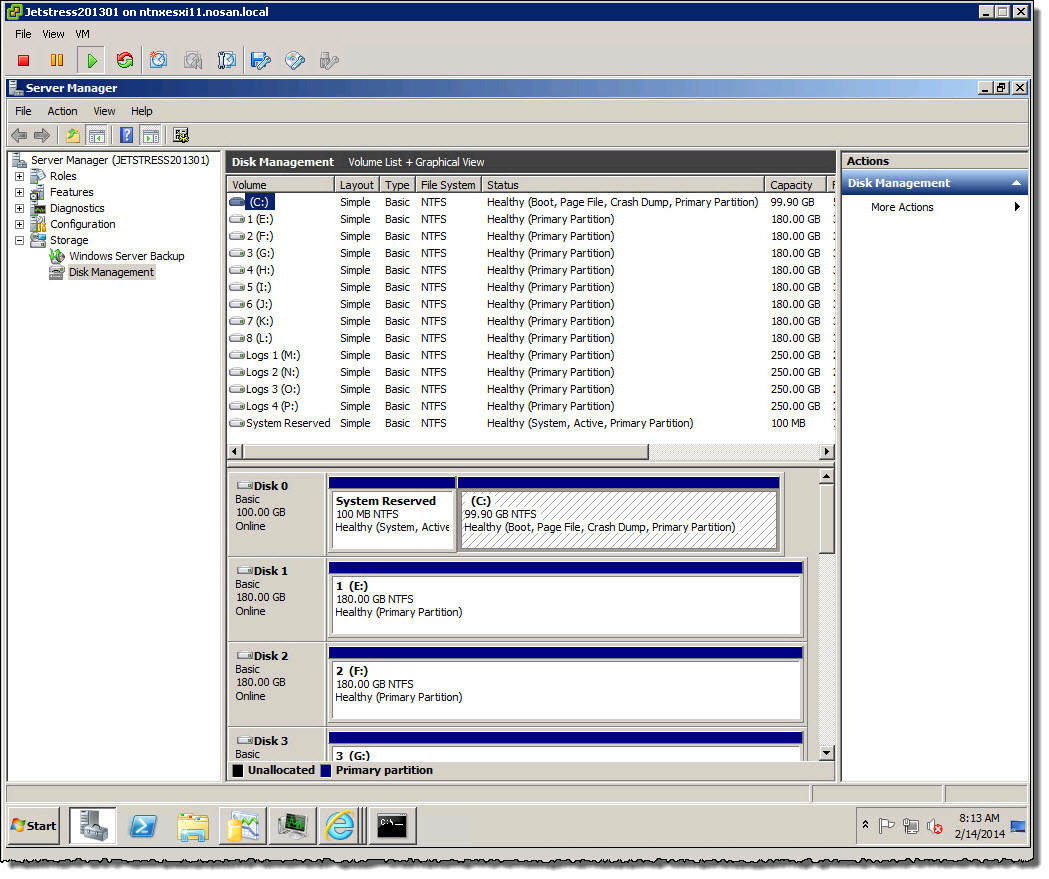

Let's dig further. In “Server Manager” we can see each of the VMDKs showing as an NTFS file system, again on a block storage device.

Looking into one of the drives, in this case drive F:\, we can see the Jetstress *.EDB file sitting inside the NTFS file system, which, as shown in the “Properties” window, is detected as a “Local disk”.
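The same “Local disk” classification can be checked from inside the guest. This Python sketch calls the Win32 `GetDriveTypeW` function through `ctypes`; on a VMDK-backed volume we would expect `DRIVE_FIXED` (what Explorer shows as a local disk), whereas a mapped network share reports `DRIVE_REMOTE`. The drive letter `F:\` follows the example above, and the script only makes the call when it is running on Windows.

```python
import ctypes
import os

# Return values of the Win32 GetDriveTypeW function.
DRIVE_TYPES = {
    0: "DRIVE_UNKNOWN",
    1: "DRIVE_NO_ROOT_DIR",
    2: "DRIVE_REMOVABLE",
    3: "DRIVE_FIXED",    # local disk, e.g. a VMDK behind a virtual SCSI controller
    4: "DRIVE_REMOTE",   # network drive, e.g. a mapped SMB share
    5: "DRIVE_CDROM",
    6: "DRIVE_RAMDISK",
}


def drive_type(root: str) -> str:
    """Return how Windows classifies the volume at `root`, e.g. drive_type("F:\\")."""
    if os.name != "nt":
        raise OSError("GetDriveTypeW is only available on Windows")
    code = ctypes.windll.kernel32.GetDriveTypeW(root)  # root needs a trailing backslash
    return DRIVE_TYPES.get(code, f"UNKNOWN({code})")


if __name__ == "__main__" and os.name == "nt":
    print(drive_type("F:\\"))
```

Nothing in this check can see whether the VMDK file lives on VMFS or NFS; from the guest's point of view it is simply a fixed local disk.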

So, we have a Virtual SCSI Controller and a Virtual SCSI Disk, appearing to Windows as a local SCSI device formatted with NTFS.

So what’s the issue? Well, as the community post explains and this post shows, there isn’t one! This configuration should be supported!

The Guest OS and Exchange have access to block storage that meets all the requirements outlined by Microsoft, but for some reason, because the VMDK sits on an NFS datastore (shown below), people (including Microsoft, it seems) mistakenly assume that Exchange is being served by NFS, which it is NOT!

I hope this helps clear up what the community is asking for. If anyone has any questions on the above, please let me know and I will clarify.

Related Articles

1. “Support for Exchange Databases running within VMDKs on NFS datastores”

2. Microsoft Exchange Improvements Suggestions Forum – Exchange on NFS/SMB

This is excellent, Josh. Bravo. I had a conversation with an MS consultant a few months back on this exact topic and this article would have been brilliant to show him! In fact, I’m forwarding it on as we speak. Bring forth the revolution!

Thanks for the feedback, and I hope it helps.

You will be hearing a lot more on this topic in the coming weeks/months.

Also, please be sure to leave your comments/votes on:

http://social.technet.microsoft.com/Forums/en-US/c8b4a605-3083-4d0f-b3aa-62ea57cc6d43/support-for-exchange-databases-running-within-vmdks-on-nfs-datastores?forum=exchangesvrdevelopment

&

http://exchange.ideascale.com/a/dtd/support-storing-exchange-data-on-file-shares-nfs-smb/571697-27207

Great article! I would only add that selecting an NFS datastore defaults your VMDK disk type to Thin Provisioned. Depending on performance and the IOPS written to the NFS server, block-level storage with “Thick Eager Zeroed” disks may perform better.

VAAI-aware NFS servers can “Reserve Space” with the new VAAI v2 revisions in vSphere 5. Prior to vSphere 5.x, the benefit of Thick Eager Zeroed disks (fully provisioned, pre-zeroed, fully reserved VMDKs) was available only on block-level storage such as iSCSI, Fibre Channel, FCoE, DAS, etc.

I know this is a bit out of scope for the conversation; just my two cents.

Hi Michael

Thanks for the comment. I totally agree with your explanation of NFS and thin provisioning by default, as well as with the VAAI-NAS comments.

The only thing I would add is that what you have said is true for traditional storage solutions, but less so for more modern solutions, where the benefits of Eager Zeroed Thick disks or thick-provisioned LUNs, dedicated spindles, etc. are basically eliminated.

I think the important factor, regardless of storage protocol, is ensuring the storage solution is properly architected to give the required performance for the application. These days, performance limitations normally lie in the controller or storage area network rather than in the protocol or provisioning type, even with traditional shared storage.

Cheers

So, Microsoft supports “file level” storage via SMB 3.0, but NOT NFS? Isn’t that a little hypocritical? Especially in a virtual environment? I don’t believe I have ever seen a valid argument as to why NFS is not supported…

Except for Microsoft saying “Because I said so”

When I met with Microsoft to discuss this topic, there was no challenge to this post or the TechNet article below. It is, as I have always said, not a technical issue.

http://social.technet.microsoft.com/Forums/en-US/c8b4a605-3083-4d0f-b3aa-62ea57cc6d43/support-for-exchange-databases-running-within-vmdks-on-nfs-datastores?forum=exchangesvrdevelopment

Hi Josh,

Does this argument apply to SQL clustering too?

Thanks,

Mike

Interestingly, no. Microsoft has a program to support SQL in VMDKs on NFS; it's just the Exchange team who need to be educated.

Thanks for the response, Josh. It’s my understanding that shared SQL clustering using shared VMDKs on NFS is not supported, at least according to the following KB.

http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1037959

I’m wondering why the logic in your Exchange article above does not necessarily apply to this case. Or does Microsoft’s program to support SQL in VMDK on NFS apply here, as well?

Thanks!

Mike

I’ll confirm the status with MS and VMware, and if necessary, expand the scope of this community movement to cover SQL.

My initial impression of the KB is that everything in the “Non Shared Disk” section should be supported, no questions asked.

For the shared disk solutions, I will clarify the requirements and advise.

Hi Josh,

Did you manage to confirm the status of SQL Clustering and VMware on NFS?

Cheers,

Sim

Sure did, see http://www.joshodgers.com/2015/03/17/microsoft-support-for-ms-sql-on-nfs-datastores/

Listen to your customers, Microsoft. We want Exchange supported on NFS. I have been running Oracle, SQL, Lotus Notes and 800 other VMs on VMDKs in NFS for over 6 years without any corruption issues. This reminds me of the “it can’t be virtualised” nonsense I had to keep hearing as we were moving servers in.

Yes please, more of the same. We need NFS support on Exchange 2010/2013. It's the only thing we have to use iSCSI for (apart from boot from SAN for our hosts), and it's a pain!


Hi Josh,

Great article! I was wondering if I could pick your brain. I see you added lots of VMDKs, I assume for your DBs. I read one article recommending mount points instead of drive letters. I started to do this, but getting free disk space monitoring working seems to be a bit of a challenge, not to mention that I think the people I hand this off to to “manage” will be confused by the mount points (how to increase the size of a disk, etc.), so I’m leaning towards going back to drive letters (still in pilot phase).

Is there a magic number for how many DBs to put on one volume? They claim the IOPS are low and all, but I just can't seem to decide what is best for my situation. We are splitting our DBs by first initial and spreading them across servers: A-M01, A-M02, N-Z01, N-Z02. Odd-numbered DBs are active on the odd server and even-numbered DBs are active on the even server, with copies the other way. We have about 2300 mailboxes and 8TB of mail! I realize they claim 4 copies is best, but I have to take SAN costs into consideration with all these DB copies.
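The odd/even scheme described above can be expressed as a small rule. This Python sketch assumes the number at the end of each database name (for example `N-Z01`) decides where it is active; the server names `MBX01` and `MBX02` are hypothetical, since the comment only says “odd server” and “even server”. In Exchange itself, this intent is normally captured through each database copy's activation preference.

```python
import re

# Hypothetical server names; the comment only refers to an "odd" and an "even" server.
ODD_SERVER = "MBX01"
EVEN_SERVER = "MBX02"


def activation_plan(db_name: str) -> tuple[str, str]:
    """Return (active_server, copy_server) for a DB name ending in a number.

    Odd-numbered DBs are active on the odd server with their copy on the even
    server; even-numbered DBs are the other way round.
    """
    match = re.search(r"(\d+)$", db_name)
    if match is None:
        raise ValueError(f"database name has no trailing number: {db_name!r}")
    if int(match.group(1)) % 2 == 1:
        return ODD_SERVER, EVEN_SERVER
    return EVEN_SERVER, ODD_SERVER


if __name__ == "__main__":
    for db in ["A-M01", "A-M02", "N-Z01", "N-Z02"]:
        active, copy = activation_plan(db)
        print(f"{db}: active on {active}, copy on {copy}")
```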

Thanks!

Hi Nelson.

Thanks for the feedback.

The reason to use mount points is the limited number of available drive letters.

For an environment of your size, you should be fine with around 8 virtual disks per Mailbox server, which means using drive letters won't be a problem.
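To put numbers on the drive letter limit: Windows has 26 letters, and a few are normally taken. This Python sketch assumes A, B, C and D are unavailable (floppy, system volume and CD/DVD drive), which is an assumption about a typical build rather than a rule, and assigns the remaining letters to data disks.

```python
import string

# Assumed to be unavailable for data volumes (typical build, not a rule):
# A/B legacy floppy, C system volume, D CD/DVD drive.
RESERVED = frozenset({"A", "B", "C", "D"})


def assign_drive_letters(disk_count: int, reserved: frozenset[str] = RESERVED) -> list[str]:
    """Give each data VMDK its own drive letter, skipping reserved letters.

    Raises once there are more disks than free letters, which is the point
    where mount points become necessary.
    """
    free = [letter for letter in string.ascii_uppercase if letter not in reserved]
    if disk_count > len(free):
        raise ValueError(
            f"{disk_count} disks but only {len(free)} free drive letters; use mount points"
        )
    return [f"{letter}:\\" for letter in free[:disk_count]]


if __name__ == "__main__":
    print(" ".join(assign_drive_letters(8)))  # 8 data disks per Mailbox server
```

Under these assumptions 22 letters remain, so 8 disks per Mailbox server leaves plenty of headroom.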

Jump into the Exchange Server Role Requirements Calculator and plug in your user profiles; I’d suggest you would be fine with 3 VMs.

If you're running on shared storage, which provides protection against drive failures, then 4 copies are not required. I personally suggest no more than 2 copies per site if running on shared storage.

2 DAG copies plus storage-layer protection will be very resilient and save you a bucketload of money compared to keeping 4 copies, which won't give you significantly more availability and will use a lot more compute.
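Using the 8TB of mail from the question above, the capacity side of this trade-off is simple multiplication. This Python sketch ignores log, content index and growth overhead, which a real sizing exercise must add, and the RAID 10 efficiency of 0.5 is an illustrative assumption for a RAID-protected SAN, not a recommendation.

```python
def capacity_tb(mail_tb: float, dag_copies: int, raid_efficiency: float = 1.0) -> float:
    """Storage needed for `dag_copies` copies of `mail_tb` of mailbox data.

    raid_efficiency is usable/raw capacity of the storage layer (e.g. 0.5 for
    RAID 10); the default of 1.0 returns usable capacity only. Log, content
    index and growth overhead are deliberately ignored.
    """
    if dag_copies < 1:
        raise ValueError("need at least one database copy")
    if not 0 < raid_efficiency <= 1:
        raise ValueError("raid_efficiency must be in (0, 1]")
    return mail_tb * dag_copies / raid_efficiency


MAIL_TB = 8  # from the question: about 8TB of mail

if __name__ == "__main__":
    for copies in (2, 4):
        usable = capacity_tb(MAIL_TB, copies)
        raw = capacity_tb(MAIL_TB, copies, raid_efficiency=0.5)  # assumed RAID 10
        print(f"{copies} copies: {usable:g} TB usable, {raw:g} TB raw on RAID 10")
```

On the same RAID-protected SAN, going from 2 to 4 copies doubles the capacity required, before counting the extra compute for the additional copies.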

If it's on a traditional SAN, try to have dedicated RAID groups for Exchange data. While the IOPS are low, they are larger IOs (32-64 KB), so they are not as “low impact” as some people describe.
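The point about IO size is easy to quantify: bandwidth is IOPS multiplied by IO size. This Python sketch uses a hypothetical load of 1,000 IOPS; the real figure should come from the Exchange sizing calculator and a Jetstress run.

```python
def throughput_mib_s(iops: float, io_size_kib: float) -> float:
    """Bandwidth implied by an IOPS figure at a given IO size, in MiB/s."""
    return iops * io_size_kib / 1024


if __name__ == "__main__":
    # Hypothetical 1,000 IOPS at a small (8 KiB) vs. Exchange-like (32/64 KiB) IO size.
    for size in (8, 32, 64):
        print(f"1000 IOPS @ {size} KiB = {throughput_mib_s(1000, size):.2f} MiB/s")
```

The same IOPS figure moves four to eight times more data at 32-64 KiB than at 8 KiB, which is why a “low IOPS” workload can still load a RAID group or storage network noticeably.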

Hope that helps.

Cheers

Hi Josh,

My thoughts were exactly the same as yours regarding the storage, and yes, it’s on a traditional SAN (Dell EqualLogic). I’ll check out the RAID groups; typically I leave it on automatic for the LUNs I carve out. We have a second datacenter I plan to stretch the DAG to eventually. I used the sizing tools and followed VMware's best practices for Exchange, along with Dell’s, to set up the ESX environment.

I was really stumped on the volume layout, though. Our company grows by acquisition, and I worry about database size for replication and reseeding, so I will switch back to a drive letter design. How many active copies do you think I can get away with on a single volume? The passive copies will be housed there too. I mean, this isn’t a SQL database; how many IOPS are really needed for a JET DB when Outlook is in cached mode anyway and your logs are on a different volume?

Appreciate the reply!

Hard to say, as I'm not an expert in Dell EqualLogic, but the Exchange sizing calculator will tell you the IOPS you need. Then I'd suggest you run Jetstress (see my most recent post on Jetstress on tiered storage) and make sure it can pass a 24-hour stress test before moving production onto it. I separate DBs and logs as they are different IO types, but if you're constrained, they do work fairly well in the same volume.

I'd suggest running Jetstress as per my latest blog post and confirming the IO requirements from the Exchange sizing spreadsheet before going into production.

DB and logs on the same volume is fine; maybe create multiple thin-provisioned datastores to avoid potential datastore-level queue depth issues. As for growth, that's always a challenge with a SAN, but keep DAG copies on different volumes and have no more than 2 DAG copies, as you already have protection at the RAID level. 4 copies are only required for JBOD deployments.
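The two suggestions above (spread the data over several datastores, and keep the DAG copies of a database on different volumes) can be sketched as a simple round-robin placement. The database, server and datastore names in this Python sketch are hypothetical, and two servers means two copies per database, in line with the advice to keep no more than 2 DAG copies on RAID-protected storage.

```python
from itertools import cycle


def place_copies(
    databases: list[str], servers: list[str], datastores: list[str]
) -> dict[tuple[str, str], str]:
    """Map each (database, server) copy to a datastore.

    Each database starts on the next datastore in rotation, spreading load
    (and queue depth) across datastores; its copies on the other servers take
    the following datastores, so no two copies of one database share one.
    """
    if len(servers) > len(datastores):
        raise ValueError("need at least as many datastores as copies per database")
    placement: dict[tuple[str, str], str] = {}
    starts = cycle(range(len(datastores)))
    for db in databases:
        start = next(starts)
        for offset, server in enumerate(servers):
            placement[(db, server)] = datastores[(start + offset) % len(datastores)]
    return placement


if __name__ == "__main__":
    # Hypothetical names: 4 databases, 2 copies each, 4 NFS datastores.
    dbs = ["A-M01", "A-M02", "N-Z01", "N-Z02"]
    layout = place_copies(dbs, ["MBX01", "MBX02"], ["NFS-DS1", "NFS-DS2", "NFS-DS3", "NFS-DS4"])
    for (db, server), ds in sorted(layout.items()):
        print(f"{db} on {server}: {ds}")
```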