Once you have decided on a storage platform, and assuming you have chosen to use VMFS or NFS datastores, the next decision is how your VMDKs should be provisioned.

The VMware Exchange 2013 Best Practice Guide does not mention disk provisioning options, nor does it make any recommendations. However, you’re in luck, as we will cover all the options along with their pros and cons here.

For Exchange 2010, Microsoft state in Understanding Exchange 2010 Virtualization:

Virtual disks that dynamically expand aren’t supported by Exchange.

Virtual disks that use differencing or delta mechanisms (such as Hyper-V’s differencing VHDs or snapshots) aren’t supported.

However, I have been unable to find confirmation of whether this has changed for Exchange 2013. The Exchange 2013 storage configuration options document does state that thin provisioning for Storage Spaces is supported, but it does not state whether any other form of thin provisioning is or is not supported.

While thin provisioning is technically not supported in 2010, there are plenty of experts who understand and recommend it, including MCM and MVP for Exchange Dustin Smith, who in this video talks about some of the considerations and benefits of thin provisioning for Exchange 2010.

Now on to the topic at hand:

When creating a Virtual Machine, VMDK/s can be provisioned in one of three ways:

1. Thick Provisioned Lazy Zeroed

2. Thick Provisioned Eager Zeroed

3. Thin Provisioned

Starting with Thick Provisioned Lazy Zeroed: the VMDK is thick provisioned, so its full capacity is allocated up front, but blocks are only zeroed in a just-in-time fashion, immediately before they are first written to.

The advantages of Thick Provisioned Lazy Zeroed VMDKs include:

1. Faster VM creation time than Eager Zeroed Thick (Minimal if the storage supports VAAI Write Same primitive)

2. The entire VMDK's capacity is reserved, making capacity planning easier than with Thin Provisioning

The disadvantages of Thick Provisioned Lazy Zeroed VMDKs include:

1. Slower provisioning than Thin Provisioning (although the difference is generally minimal)

2. The entire VMDK's capacity is reserved and unavailable for use by other virtual machines.

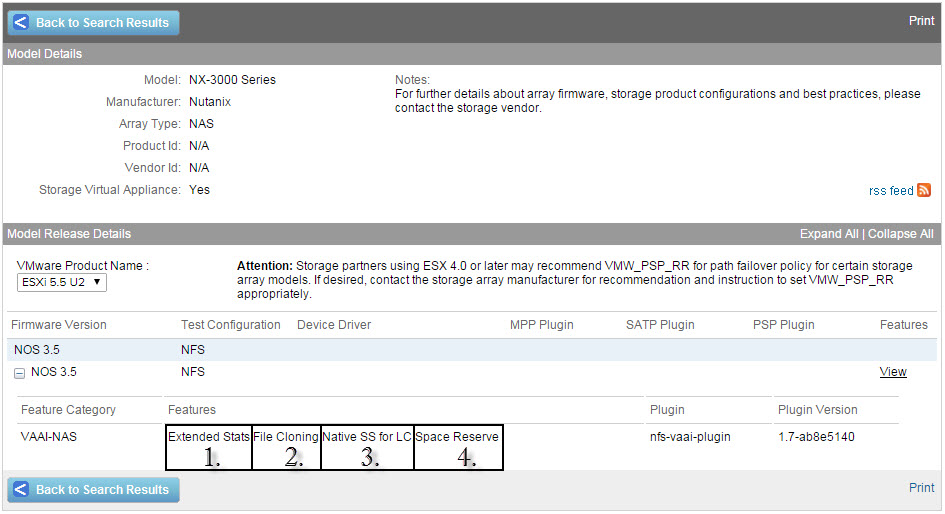

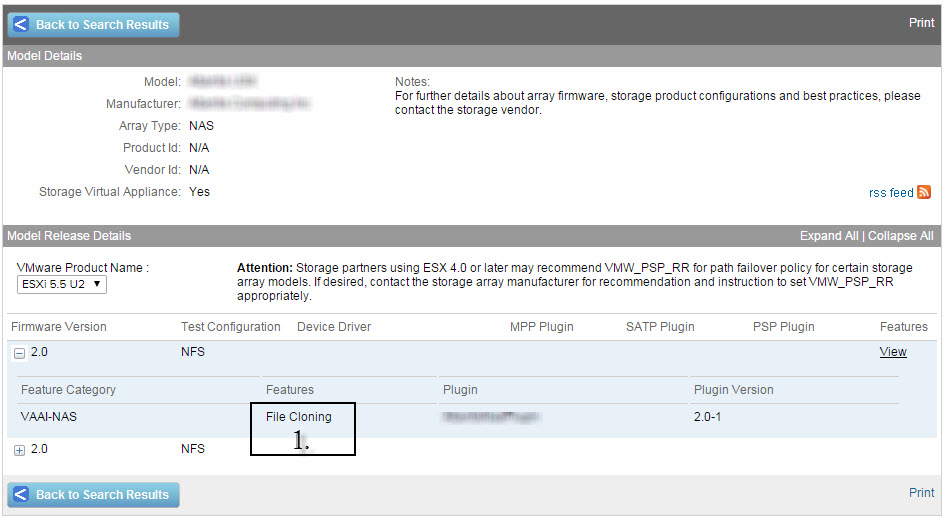

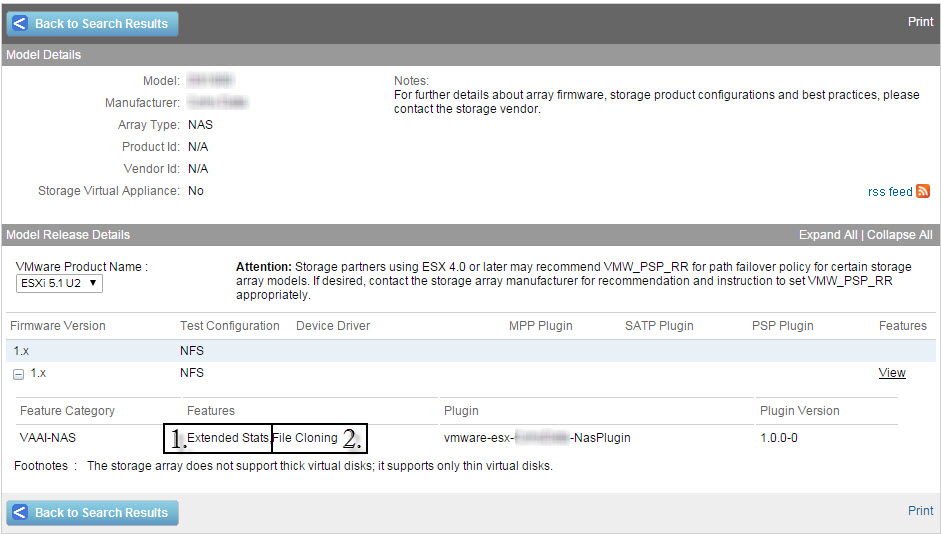

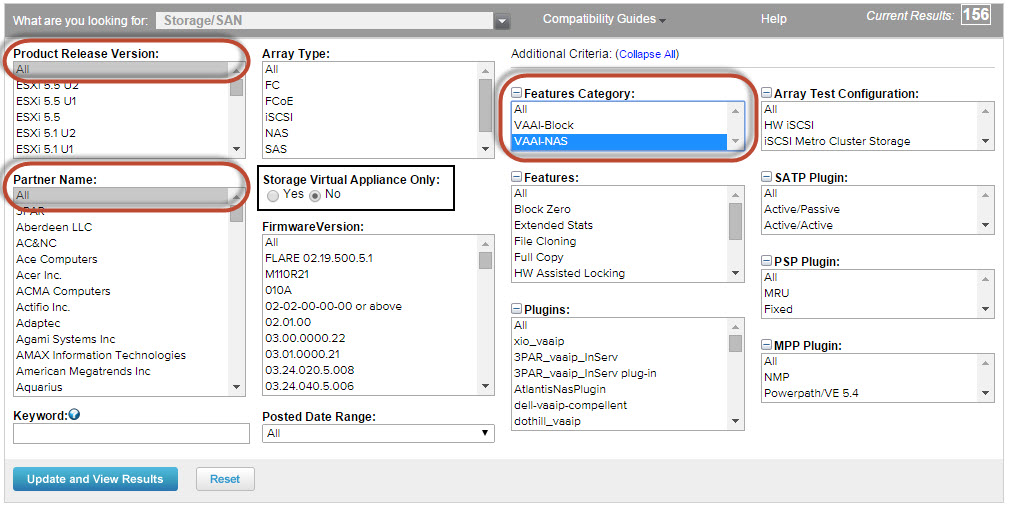

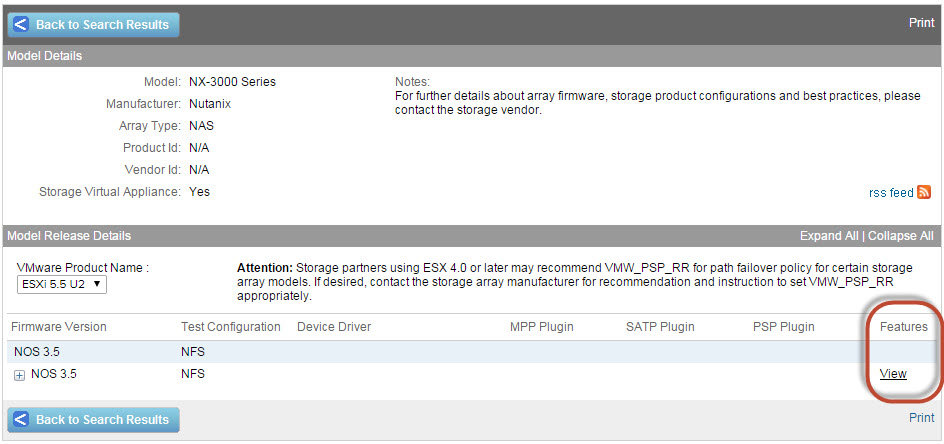

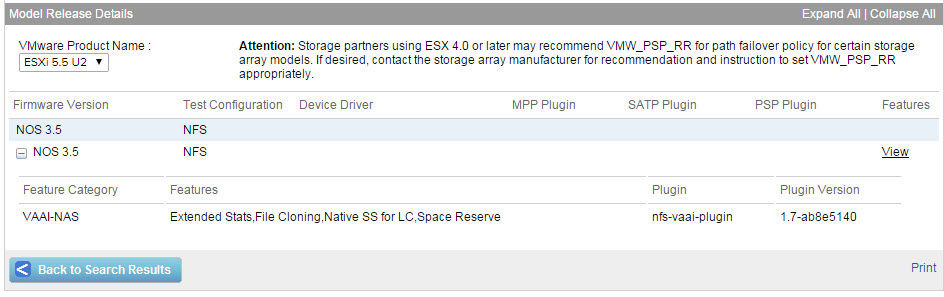

With Thick Provisioned Eager Zeroed (EZT) the VMDK is thick provisioned and all blocks are zeroed at the time of creation. Eager Zeroed Thick VMDKs are supported on all VMFS datastores and on NFS datastores which support the VAAI-NAS Reserve Space primitive.

The advantages of EZT VMDKs these days are really minimal but include:

1. Supporting Oracle RAC and VMware Fault Tolerance (neither being applicable to Exchange)

2. Increased performance versus Lazy Zeroed and Thin Provisioned VMDKs (but more on this topic later).

However there are a number of downsides to this method which include:

1. Slower VM creation times. The time depends on the size of the VMDK/s being created and the speed of your storage, as every GB needs to be zeroed, just like performing a Full (not quick) format on your physical server.

Note: Storage arrays that support VAAI with the “Write Same” primitive can offload the zeroing to the storage array to reduce the load on the ESXi host and speed up provisioning time dramatically.

2. Increased potential for wasted capacity on a datastore.

3. Free space within VMDKs cannot be shared with other VMs, so every VMDK needs its own free space (generally >10% is recommended) to ensure the VM does not run out of space.
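To put the creation time downside in perspective, here is a rough back-of-the-envelope sketch. It assumes zeroing is limited purely by a sustained sequential write rate; the function name and the example figures are my own illustrative assumptions, not measurements, and it ignores any VAAI Write Same offload.

```python
def estimate_zeroing_seconds(vmdk_size_gb: float, write_mb_per_s: float) -> float:
    """Rough time to zero an entire VMDK at a sustained sequential write rate.

    Simplified model (an assumption): no VAAI offload, no competing I/O.
    """
    if write_mb_per_s <= 0:
        raise ValueError("write_mb_per_s must be positive")
    size_mb = vmdk_size_gb * 1024  # treat 1 GB as 1024 MB
    return size_mb / write_mb_per_s


# Hypothetical example: a 2 TB database VMDK zeroed at a sustained 400 MB/s.
seconds = estimate_zeroing_seconds(2048, 400)
print(f"approx. {seconds / 60:.0f} minutes to eager zero")
```

Real world numbers will vary with the array, the fabric and whatever else the storage is doing at the time, so treat this as an order-of-magnitude guide only.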

Lastly there is Thin Provisioning, which means the VMDK only takes up the amount of space that data has been written to, and each block must be zeroed before it is first written.

The advantages of Thin Provisioning VMDKs include:

1. You can create larger VMDKs with no space utilization penalty making capacity planning and growth easier.

2. Reduce wasted or unused space on the storage

3. Allows for disk space to be overcommitted ensuring maximum utilization and flexibility.

4. Free space in VMDKs is not wasted on the datastore reducing capacity requirements compared to Eager and Lazy Zeroed VMDKs.

5. The impact of SCSI reservations (VMFS datastores ONLY) causing performance issues (increased latency) when thin provisioned virtual machines (VMDKs) grow is no longer an issue as the VAAI Atomic Test & Set (ATS) primitive alleviates the issue of SCSI reservations.

6. Thin provisioned VMs reduce the overhead for Storage vMotion, Cloning and Snapshot activities. E.g.: for Storage vMotion it eliminates the requirement for Storage vMotion (or the array, when offloaded by the VAAI XCOPY primitive) to relocate “white space”. Note: Storage vMotion should rarely if ever be required for Exchange VMs.

7. Thin provisioning leaves maximum available free space on the physical spindles which should improve performance of the storage subsystem as a whole.

The disadvantages of thin provisioning include:

1. Increased risk of running out of space on a datastore or underlying storage array.

2. Additional write penalty of zeroing a block before writing to it (again, more on performance later in this post).

3. Increased importance of monitoring storage capacity utilization.

4. Not supported for Exchange 2010. Note: there is no technical inhibitor to using Thin Provisioning, but supported options are obviously preferable.
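The capacity trade-offs between the three provisioning types can be illustrated with a small model. This is only a sketch: the class, the constants and the example sizes are hypothetical, and it deliberately ignores VMFS metadata, block-size rounding, swap files and snapshots.

```python
from dataclasses import dataclass

THICK_LAZY = "thick_lazy_zeroed"
THICK_EAGER = "thick_eager_zeroed"
THIN = "thin"


@dataclass
class Vmdk:
    name: str
    provisioned_gb: float  # size presented to the guest OS
    written_gb: float      # data the guest has actually written
    kind: str              # one of the three constants above


def consumed_gb(disk: Vmdk) -> float:
    """Datastore space the VMDK occupies right now (simplified)."""
    if disk.kind == THIN:
        # Thin disks only consume what has been written, up to their size.
        return min(disk.written_gb, disk.provisioned_gb)
    # Both thick types reserve their full capacity at creation time.
    return disk.provisioned_gb


def overcommit_ratio(disks: list[Vmdk], datastore_capacity_gb: float) -> float:
    """Provisioned capacity divided by physical capacity; > 1.0 is overcommitted."""
    if datastore_capacity_gb <= 0:
        raise ValueError("datastore_capacity_gb must be positive")
    return sum(d.provisioned_gb for d in disks) / datastore_capacity_gb


# Hypothetical datastore of 3000 GB holding three VMDKs.
disks = [
    Vmdk("db01", 2000, 900, THIN),
    Vmdk("db02", 2000, 700, THIN),
    Vmdk("logs", 500, 100, THICK_LAZY),
]
total_consumed = sum(consumed_gb(d) for d in disks)
ratio = overcommit_ratio(disks, 3000)
print(f"consumed: {total_consumed} GB, overcommit ratio: {ratio}")
```

In this made-up example the datastore is overcommitted (more capacity provisioned than physically exists) yet still has free space, which is exactly why thin provisioning improves utilization and also why monitoring becomes more important.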

All in all, @FrankDenneman (VCDX #29) sums it up perfectly with his article Thin or thick disks? – it’s about management not performance. I would also suggest considering all other workloads in the environment, not just Exchange when making decisions about Thin Provisioning as it can be very beneficial and a huge cost saving (especially CAPEX) when purchasing new equipment.

Which brings us to our next topic, Thin Vs Thick Provisioning Performance!

There have been many recommendations not to use Thin Provisioning due to the performance impact of zeroing a block before writing to it. This recommendation has been around for a long time and, like the VMDK on NFS debate, appears to have strong opinions on both sides.

Now for the facts!

From a performance perspective most people are surprised to learn there is no significant performance advantage to using Thick Provisioned (Eager or Lazy Zeroed) VMDKs compared to Thin Provisioned disks.

In addition to that, with the reduction of I/O from Exchange 2007 to 2010 being around 50%, and from 2010 to 2013 another 50% reduction in I/O, Exchange is no longer the huge storage I/O heavy monster it once was.
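To make the cumulative effect of those two reductions concrete, here is a trivial sketch. It takes the "around 50%" figures at face value, which is an approximation, and the function name is purely illustrative.

```python
def remaining_io(baseline: float, reductions: list[float]) -> float:
    """Apply successive fractional reductions to a baseline I/O figure."""
    remaining = baseline
    for reduction in reductions:
        remaining *= 1.0 - reduction
    return remaining


# Exchange 2007 as the baseline (1.0), then roughly 50% less in 2010 and again in 2013.
io_2013_vs_2007 = remaining_io(1.0, [0.5, 0.5])
print(f"Exchange 2013 I/O relative to 2007: {io_2013_vs_2007:.0%}")
```

In other words, under these rough figures an Exchange 2013 mailbox generates around a quarter of the I/O it would have on Exchange 2007.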

VMware conducted a Performance Study of VMware vStorage Thin Provisioning back in the ESXi 4.0 days (~2009) which I will briefly summarize.

On page 6 of the performance study the following graph shows the difference in performance between Thin and Thick VMDKs during zeroing and post-zeroing.

As you can see the performance is almost identical.

The next chart, also from page 6, is a comparison of throughput between thin and thick VMDKs. Again we see the difference is insignificant.

As there is no significant performance impact from using Thin Provisioning, performance should no longer be considered an objection to using it!

I recommend taking advantage of the flexibility of using Thin Provisioning and creating larger Thin Provisioned VMDKs which can help simplify capacity management from a VM/OS and application perspective as well as making growth easier for Exchange as mailbox sizes increase over time.

When using thin provisioning, always ensure alerting is properly set up with early warning on your vSphere environment AND the underlying storage, so you are advised when the capacity of a datastore, or of the underlying LUN, NFS mount or array, is running low and it can be remediated.
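As a minimal sketch of the kind of early-warning check I mean, the snippet below classifies capacity at both the datastore and the underlying storage layer. The 75% and 85% thresholds, the layer names and the figures are assumptions for illustration; in practice you would configure this in your vSphere alarms and your array's own monitoring rather than in a script.

```python
def capacity_status(used_gb: float, capacity_gb: float,
                    warn_pct: float = 75.0, crit_pct: float = 85.0) -> str:
    """Classify capacity utilization as 'ok', 'warning' or 'critical'."""
    if capacity_gb <= 0:
        raise ValueError("capacity_gb must be positive")
    used_pct = 100.0 * used_gb / capacity_gb
    if used_pct >= crit_pct:
        return "critical"
    if used_pct >= warn_pct:
        return "warning"
    return "ok"


def check_layers(layers: dict[str, tuple[float, float]]) -> dict[str, str]:
    """Check every layer; each value is a (used_gb, capacity_gb) pair."""
    return {name: capacity_status(used, cap) for name, (used, cap) in layers.items()}


# Hypothetical figures: the datastore looks healthy, but the thin LUN behind it does not.
statuses = check_layers({
    "datastore": (2100, 3000),
    "array_pool": (9000, 10000),
})
for layer, status in statuses.items():
    print(f"{layer}: {status}")
```

The point of checking both layers is that a datastore can report plenty of free space while the thin provisioned pool underneath it is close to full.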

In an upcoming post I will discuss the underlying storage, including provisioning type for LUNs and NFS mounts (i.e.: Thin on Thick / Thin on Thin / Thick on Thick and Thick on Thin).

Recommendations for VMDK provisioning:

1. Check with your storage vendor and unless they have solid justification for not using Thin Provisioning OR you have an operational constraint preventing it, use Thin Provisioned VMDKs. (The pros outweigh the cons in my opinion)

2. When using Thin Provisioning create larger VMDKs to simplify capacity management at the VM and OS/Application layer.

3. Whether using Thick or Thin provisioning, ensure you test performance using Jetstress and LoadGen with the same provisioning type you intend to use in production.

4. Ensure alerting is configured and working to monitor capacity utilization especially when using thin provisioned VMDKs.

Back to the Index of How to successfully Virtualize MS Exchange.

More Information on VMDK and Datastore provisioning options:

1. Example Architectural Decision – Datastore (LUN) and Virtual Disk Provisioning (Thin on Thin)

2. Example Architectural Decision – Datastore (LUN) and Virtual Disk Provisioning (Thin on Thick)
