For many years, I have been asked on countless occasions questions relating to how many VMs can (or should) be placed in one datastore.

In fact, just this morning I was asked this same question, and I decided to whip up a quick post.

I have previously posted an Example Architectural Decision relating to Datastore sizing for Block based storage. What this example was aimed to show was a how things like RPO/RTO and performance should be taken into consideration when choosing a datastore size.

The above example is not a hard and fast rule, but an example of one deployment which I was involved in.

There is a great article written on this topic by VCDX, Jason Boche (@jasonboche), titled “VAAI and the Unlimited VMs per Datastore Urban Myth” which covers in great detail this topic as it relates to block based storage, being iSCSI, FC & FCoE.

But what about NFS, and what about with Hyper-converged solutions like Nutanix?

NFS has gained significant popularity in recent years, and in my opinion, people who know what they are talking about, no longer refer to NFS as “Tier 3 Storage” which was once common.

With traditional storage solutions, generally only a smaller number of controllers can actively serve IO to the one NFS mount, so the limiting factor preventing running more virtual machines per NFS mount, in my experience was performance but things like RPO/RTO were and are important considerations.

NFS does not suffer from SCSI reservations which resulted in increased latency ,which is what VAAI, specifically the Atomic Test & Set or ATS primitive helped too all but eliminate for block based datastores.

LUNs are limited by there queue depth, which in most cases is 32 (sometimes 64). This is also a limiting factor, as all the VMs in a datastore (LUN) share the same queue which can lead to contention. SIOC helps manage the contention by ensuring fairness based on share values, but it does not solve the issue.

NFS on the other hand has a much larger queue depth, in fact its basically unlimited as shown below.

So as NFS does not suffer from SCSI reservations, or queue depth issues, what is limiting us having hundreds or more VMs per datastore?

It comes down to how many active storage controllers are able to service the NFS mount, and the performance of the storage controller/s. In addition to this your business requirements around RPO/RTO. In other words, if a NFS mount is lost, how quickly can you recover.

For most traditional shared storage products,

1. Have only 1 or 2 active controllers – thus potentially limiting performance which would lead to lower VMs per NFS datastore.

2. Do snapshots at the NFS mount layer, so if you need to recover an entire NFS mount, the larger it is, the longer it may take.

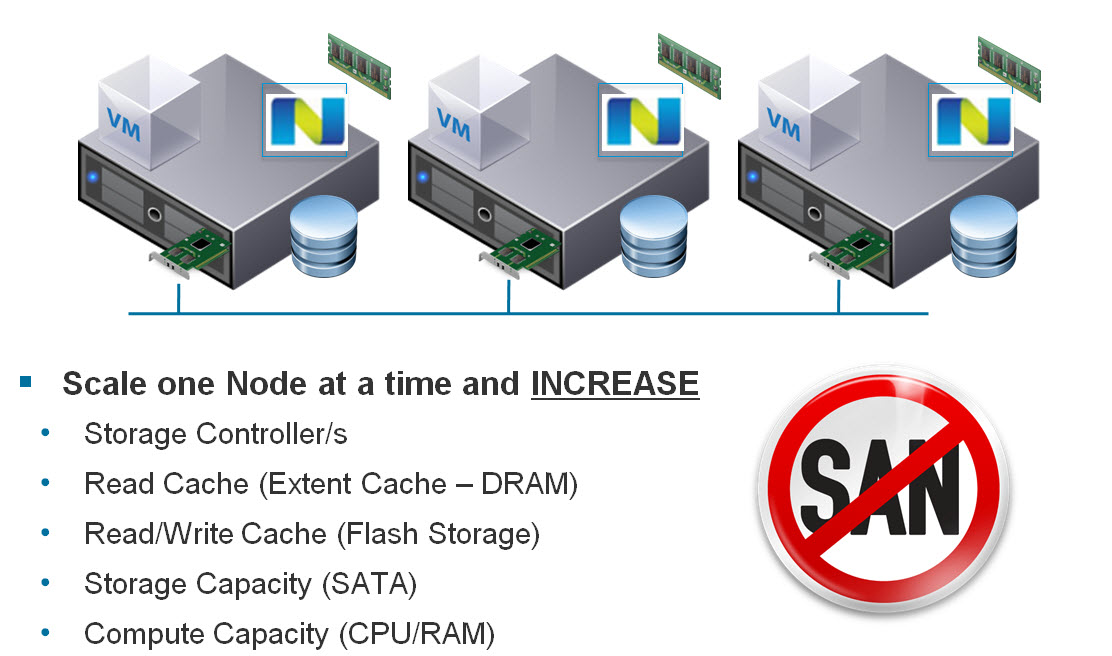

For Nutanix, by default, NFS is used to present the Nutanix Distributed File System (NDFS) to vSphere, however the key difference between Nutanix and traditional shared storage is every controller in the Nutanix cluster, can and does Actively serve IO to any datastore in the cluster concurrently.

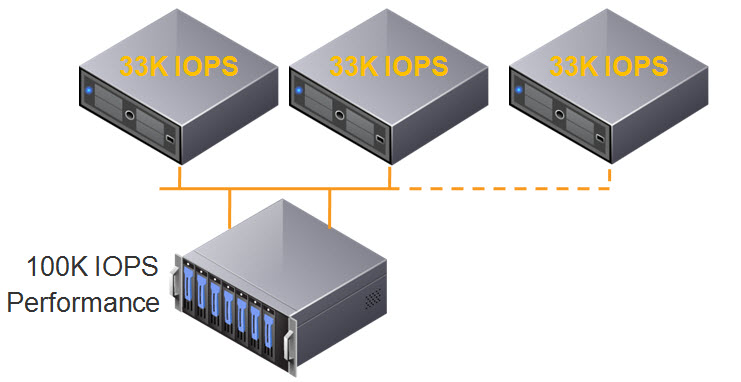

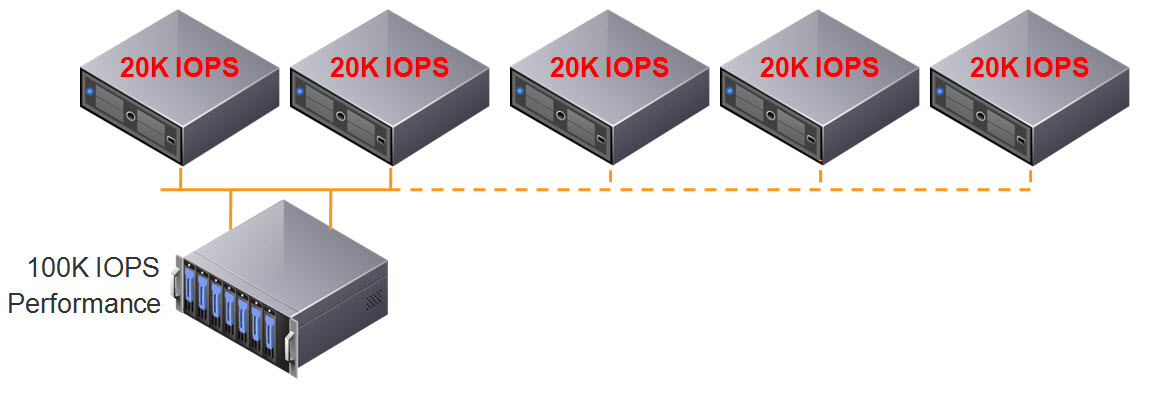

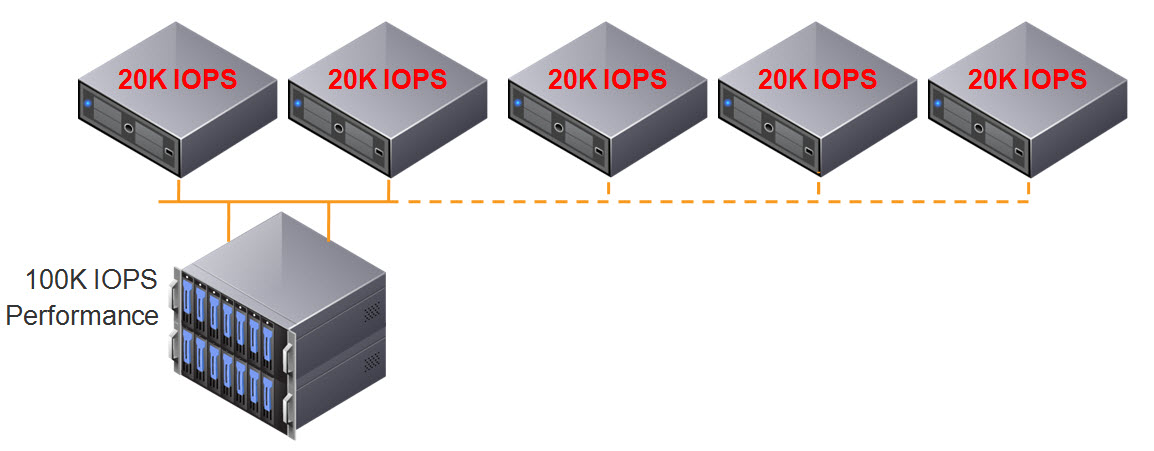

So the limit from a performance perspective is gone thanks to Nutanix scale out, shared nothing architecture, with one virtual storage controller (CVM) per Nutanix node. The number of nodes that’s can be scaled too, is also unlimited. An example of Nutanix ability to scale can be found here – Scaling to 1 million IOPS and beyond, Linearly!

Next what about the RPO/RTO issue? Well, Nutanix does not rely on LUNs or NFS mounts for our data protection (or snapshots), this is all done at a VM layer so your RPO/RTO is now per VM, which gives you much more flexibility.

With Nutanix, you can literally run hundreds or even thousands of VMs per NFS datastore, without performance or RPO/RTO problems thanks to scale out, shared nothing architecture and the Nutanix Distributed File System.

There are some reasons why you may choose to have multiple NFS datastores even in a Nutanix environment, these include, if you want to enable Compression and/or De-duplication which are enabled/disabled on a per container (or datastore) level. As some workloads don’t compress or dedupe well, these types of workloads should be excluded to reduce the overhead on the cluster.

It is important to note, Nutanix uses a concept called a “Storage Pool” which contains all the storage for the Nutanix cluster. On top of a “Storage Pool” you create “Containers” (or datastores). This means regardless of if you have 1 or 100 datastores, they all still sit on top of the one “Storage Pool” which means you still have access to the same amount of storage capacity, with no silos for maximum capacity utilization (and performance!).

Lastly, Nutanix does not suffer from the same availability concerns as traditional shared storage where a single LUN could potentially be lost. This is due to the distributed architecture of the Nutanix solution. For more information on how Nutanix is more highly available than traditional shared storage, check out “Scale out, Shared Nothing Architecture Resiliency by Nutanix”

Check out a screen shot of one cluster with ~800 VMs on a single datastore. Note: The sub millisecond latency and 14K IOPS w/ ~900MBps throughput. Not bad!