In this post I will be discussing Real World Performance of Storage solutions compared to peak performance. To make my point I will be using some car analogies which will hopefully assist in getting my point across.

Starting with the Bugatti Veyron Super Sport (below). This car has a W16 engine with 4 turbochargers and produces 1183BHP (~880kW) and has a top speed (peak performance) of 267MPH (431KPH).

The Veyron achieved the world record 267MPH at Volkswagen’s Ehra-Lessien test track in Germany. The test track has a 5.6 mile long straight. This is one of the very few places on earth where the Veyron can actually achieve its peak performance.

Now for the Veyron to achieve the 267MPH, not only do you need a 5.6 mile long straight, but the Veyron’s rear spoiler must NOT be deployed. Now rear spoilers provide down-force to keep stability so having the spoiler down means the car has a reduced ability to for example take corners.

In addition to requiring a 5.6 mile long straight, the rear spoiler being down, the Veyron can also only maintain its top speed (Peak performance) for 12 minutes before the Veyron’s 26.4-gallon fuel tank will be emptied, which is lucky because the Veyron’s specially designed tyres only last 15mins at >250MHP.

So in reality, while the Bugatti Veyron is one of (if not the fastest) production car in the world, even when you have all your ducks in a row, you can still only achieve its peak performance for a very short period of time (in this example <12 mins) and with several constraints such as reduced ability to corner (due to reduced aerodynamics from the spoiler being down).

Now what about Fuel Economy? The Veyron is rated as follows:

City Driving: 29 L/100 km; 9.6 mpg

Highway Driving: 17 L/100 km; 17 mpg

Top Speed: 78 L/100 km; 3.6 mpg

As you can see, vastly different figures depending on how the Veyron is being used.

There are numerous other factors which can limit the Veyron’s performance, such as weather. For example if the test track is wet, or has strong head winds, the Veyron would not be able to perform at its peak.

So while the Veyron can achieve the 267MPH, In the real world, its average (or Real World) performance will be much lower and will vary significantly from owner to owner.

At this stage you’re probably asking “What has this got to do with Storage”?

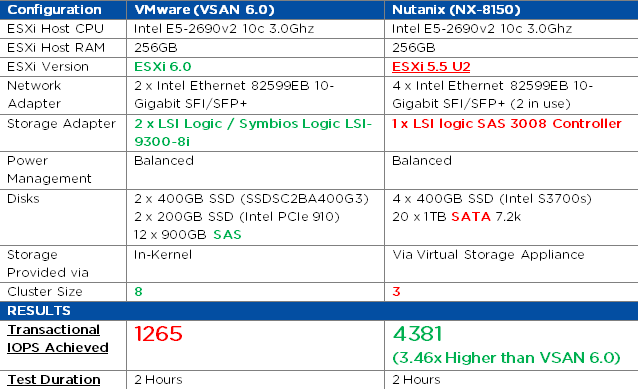

A Storage solution, be it a SAN/NAS or Hyper-Converged, all can be configured and benchmarked to achieve really impressive Peak Performance (IOPS) much like the Veyron.

But these “Peak Performance” numbers can rarely (if at all) be achieved with “Real World” workloads, especially over an extended duration.

To quote two great guys in the Storage industry (Vaughn Stewart & Chad Sakac):

Absolute performance more often than not, is NOT the only design consideration.

I couldn’t agree with this more. The storage vendors are to blame by advertising unrealistic IOPS numbers based on 4K 100% read and now customers expect the same number of IOPS from SQL or Oracle.

The MPG of the Veyron is like the number of IOPS a Storage array can achieve. It Depends on how the Car or Storage Array is used! The car will get higher MPG if used only on the highway just like a Storage Array will get higher IOPS if only used for one I/O profile.

As the IO size and profile of workloads like SQL & Oracle are vastly different than the peak performance benchmarks using 4K 100% Read IOPS, expecting the same IOPS number for the benchmark and SQL/Oracle is as unrealistic as expecting the Veyron to do 267MPH in heavy traffic.

But like I said, Its the storage vendors fault for failing to educate customers on real world performance so many customers have the impression that peak IOPS is a good measurement, and as a result customers regularly waste time comparing Peak Performance of Vendor A and Vendor B, instead of focusing on their requirements and Real World performance.

In the real world, (at least in the vast majority of cases) customers don’t have dedicated storage solutions for one application where peak performance can be achieved, let alone sustained for any meaningful length of time.

Customers generally run numerous mixed workloads on their storage solutions, everything from Active Directory, DNS , DHCP etc which has low capacity/IOPS requirements , Database, Email and Application servers which may have higher capacity/IOPS requirements to achieve and backup with are low IOPS but high capacity.

Each of these workloads have different IO profiles and depending on storage architecture may share storage controllers / SSDs / HDDs / storage networking all of which can result in congestion / contention which leads to reduced performance.

Before you start considering what vendors storage solution is best, you need to first understand (and document) your requirements along with a success criteria which you can validate storage solutions against.

If your requirements are for example:

- Host 10TB of Exchange Mailboxes for 2000 users (~400 random Read/Write 32-64k IOPS)

- Host 20TB Windows DFS solution

- Host 50TB of Backups

- Support 1TB active working set SQL Database

- Host 10TB of misc low IO random workload

- Have Per VM snapshot / backup / replication capabilities

Then there is no point having (or testing) a solution for 100k Random Read 4k IOPS, as your requirement may be less than 10K IOPS of varying sizes and profile.

Consider this:

If the storage solution/s your considering can achieve the 10K IOPS with the I/O profile of your workloads and can be easily scaled, then a solution able to achieve 20K IOPS day 1, is of little/no advantage to a solution which can achieve 12K IOPS since 10K IOPS is all that you need.

Now if your Constraints are:

- 12RU rack space

- 4kw Power

- $200k

Anything that’s larger than 12RU, uses more than 4Kw of Power or costs more than $200k is not something you should spend your time looking at / benchmarking etc since its not something you can purchase.

So to quote Vaughn and Chad again, “Don’t perform Absurd Testing”.

In my opinion, customers should value their own time enough not to waste time doing a proof of concepts (PoCs) on multiple different products when in reality only 2 meet your requirements.

An example of Absurd testing would be taking a Toyota Corolla on a test drive to a drag strip and testing its 1/4 mile performance when you plan to use the car to pick-up the shopping and drop the kids off at school.

Its equally as Absurd to test 100% Random Read 4k IOPS or consider/test/compare a storage solutions <insert your favourite feature here> when its not required or applicable to your use case.

Summary:

- Peak performance is rarely a significant factor for a storage solution.

- Understand and document you’re storage requirements / constraints before considering products.

- Create a viability/success criteria when considering storage which validates the solution meets you’re requirements within the constraints.

- Do not waste time performing absurd testing of “Peak performance” or “features” which are not required/applicable.

- Only conduct Proof of Concepts on solutions:

- Where no evidence exists on the solutions capability for your use case/s.

- Which fall within your constraints (Cost, Size , Power , Cooling etc).

- Which on paper meet/exceed your requirements!

- Where you have a documented PoC plan with a detailed success criteria!

- As long as the solution your considering can quickly, easily and non-disruptively scale, there is no need to oversize day 1.

- If the solution your considering CANT quickly, easily and non-disruptively scale, then its probably not worth considering.

- The performance of a storage solution can be impacted by many factors such as compute, network and applications.

- When Benchmarking, do so with tests which simulate the workload/s you plan to run, not “hero” style 100% read 4k (to achieve peak IOPS numbers) or 100% read 256k (to achieve high throughput numbers).