Let me start by saying I believe complexity is one of the biggest, and potentially the most overlooked, issues in modern datacenters.

Virtualization has enabled increased flexibility and solved countless problems within the datacenter. But over time I have observed an increase in complexity, especially around the management components, which for many customers is a major pain point.

Complexity increases cost (both CAPEX & OPEX) and risk, which commonly leads to reduced availability and performance.

In Part 10, I will cover Cost in more depth so let’s park it for the time being.

When architecting solutions for customers, my number one goal is to meet/exceed all my customers’ requirements with the simplest solution possible.

Acropolis is where web-scale technology delivers enterprise-grade functionality with consumer-grade simplicity, and with AHV the story gets even better.

Removing Dependencies

A great example of the simplicity of the Nutanix Xtreme Computing Platform (XCP) is its lack of external dependencies. There is no requirement for any external databases when running Acropolis Hypervisor (AHV), which removes the complexity of designing, implementing and maintaining enterprise-grade database solutions such as Microsoft SQL Server or Oracle.

This is even more of an advantage when you take into account the complexity of deploying these platforms in highly available configurations such as AlwaysOn Availability Groups (SQL) or Real Application Clusters (Oracle RAC), where SMEs need to be engaged for design, implementation and maintenance. Because it does not depend on third-party database products, AHV reduces or removes complexity around product interoperability and the need to call multiple vendors if something goes wrong. This also means no more investigating Hardware Compatibility Lists (HCLs) and Interoperability Matrices when performing upgrades.

Management VMs

Only a single management virtual machine (Prism Central) needs to be deployed, even for multi-cluster, globally distributed AHV environments. Prism Central is an easy-to-deploy appliance and, since it is stateless, it does not require backing up. In the event the appliance is lost, an administrator simply deploys a new Prism Central appliance and connects it to the clusters, which can be done in a matter of seconds per cluster. No historical data is lost, as the data is maintained on the clusters being managed.

Because Acropolis requires no additional components, it all but eliminates the design/implementation and operational complexity for management compared to other virtualization / HCI offerings.

Other supported hypervisors commonly require multiple management VMs and backend databases even for relatively small scale/simple deployments just to provide basic administration, patching and operations management capabilities.

Acropolis has zero dependencies during the installation phase, so customers can implement a fully featured AHV environment without any existing hardware/software in the datacenter. Not only does this make initial deployment easy, but it also removes the complexity around interoperability when patching or upgrading in the future.

Ease of Management

Nutanix XCP clusters running any hypervisor can be managed individually using Prism Element or centrally via Prism Central.

Prism Element requires no installation; it is available and performs optimally out-of-the-box. Administrators can access Prism Element via the XCP Cluster IP address or via any Controller VM IP address.

Administrators of legacy virtualization products often need to use hypervisor-specific tools to complete various tasks, which requires design, deployment and management of these components and their dependencies. With AHV, all hypervisor-level functionality is completed via Prism, providing a true single pane of glass interface for everything from Storage, Compute, Backup and Data Replication to Hardware monitoring and more.

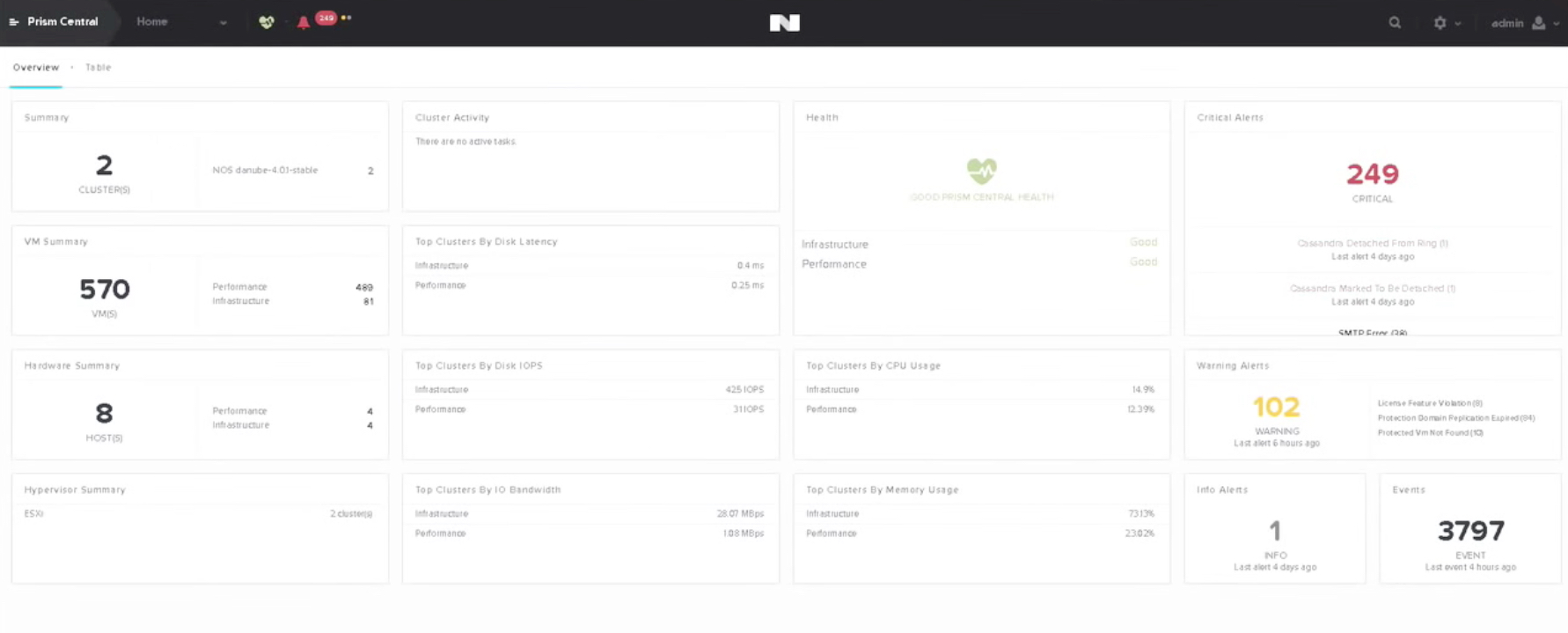

The image below shows the PRISM Central Home Screen that provides a high-level summary of all clusters in the environment. From this screen, you can drill down to individual clusters to get more granular information where required.

Administrators perform all upgrades from Prism without the requirement for external update management applications/appliances/VMs or supporting back-end databases.

Prism performs one-click, fully automated rolling upgrades to all components including Hypervisor, Acropolis Base Platform (formerly known as NOS), Firmware and Nutanix Cluster Check (NCC).
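For readers unfamiliar with the term, a rolling upgrade can be sketched in a few lines of Python. This is purely illustrative and is not Nutanix's implementation or the Prism API: the `Node` class, the `rolling_upgrade` function and the version strings are hypothetical names I have made up for the example. The one property the sketch assumes is that nodes are taken out of service one at a time, so the rest of the cluster keeps serving throughout.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical cluster node; not a Nutanix data structure."""
    name: str
    version: str
    in_service: bool = True


def rolling_upgrade(nodes: list[Node], target: str) -> list[str]:
    """Upgrade nodes one at a time and return a log of the steps taken."""
    log = []
    for node in nodes:
        if node.version == target:
            log.append(f"{node.name}: already on {target}, skipped")
            continue
        # Take only this node out of service; all others keep running.
        node.in_service = False
        serving = sum(n.in_service for n in nodes)
        node.version = target   # stand-in for the real upgrade work
        node.in_service = True  # return the node before moving on
        log.append(
            f"{node.name}: upgraded to {target} "
            f"while {serving} other node(s) kept serving"
        )
    return log


cluster = [Node("node-a", "1.0"), Node("node-b", "1.0"), Node("node-c", "1.1")]
for line in rolling_upgrade(cluster, "1.1"):
    print(line)
```

The point of the loop is that at no stage is more than one node unavailable, which is why this style of upgrade does not need a full maintenance outage.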

For a demo of Prism Central see the following YouTube video:

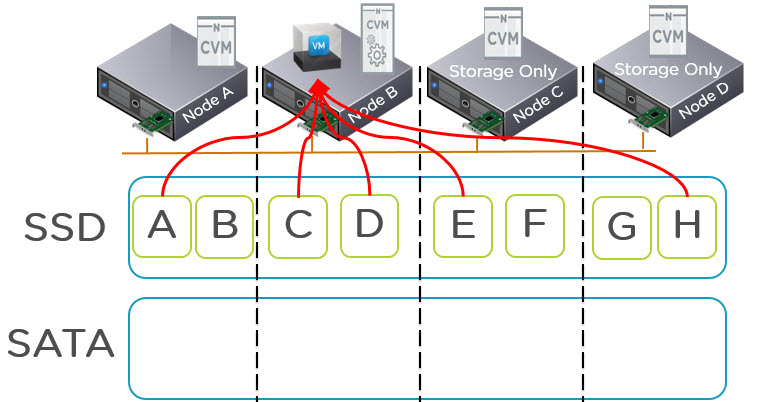

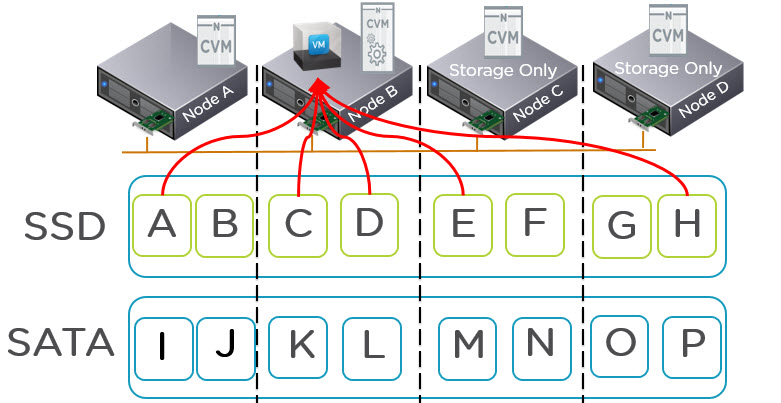

Further Reduced Storage Complexity

Storage has long been, and continues for many customers to be, a major hurdle to successful virtual environments. Nutanix has essentially made storage invisible over the past few years by removing the requirement for dedicated Storage Area Networks, Zoning, Masking, RAID and LUNs. When combined with AHV, XCP has taken this innovation yet another big step forward by removing the concepts of datastores/mounts and virtual SCSI controllers.

For each Virtual Machine disk, AHV presents the vDisk directly to the VM, and the VM simply sees the vDisk as if it were a physically attached drive. There is no in-guest configuration. It just works.

This means there is no complexity around how many virtual SCSI controllers to use, or where to place a VM or vDisk. As such, Acropolis has eliminated the requirement for advanced features that manage virtual machine placement and capacity, such as vSphere’s Storage DRS.

Don’t get me wrong, Storage DRS is a great feature which helps solve serious problems with traditional storage. With XCP these problems just don’t exist.

For more details see: Storage DRS and Nutanix – To use, or not to use, that is the question?

The following screenshot shows just how simply vDisks appear under the VM configuration menu in Prism Element. There is no need to assign vDisks to virtual SCSI controllers, which ensures vDisks can be added quickly and perform optimally.

Node Configuration

Configuring an AHV environment via Prism automatically applies all changes to each node within the cluster. Critically, with Acropolis there is no Host Profiles-style functionality to enable or configure, nor do administrators have to check for compliance or create/apply profiles to nodes.

In AHV, all networking is fully distributed, similar to the vSphere Distributed Switch (VDS) from VMware. AHV network configuration is automatically applied to all nodes within the cluster without requiring the administrator to attach nodes/hosts to the virtual networking. This helps ensure a consistent configuration throughout the cluster.

The above points are important because each dramatically simplifies the environment by removing (not just abstracting) many complicated design/configuration items such as:

- Multipathing

- Deciding how many datastores are required & what size each should be

- Considering how many VMs should reside per datastore/LUN

- Configuration maximums for Datastores / Paths

- Managing consistent configuration across nodes/hosts

- Managing Network Configuration

Administrators can optionally join Acropolis built-in authentication to an Active Directory domain, removing the requirement for additional Single Sign-On components. All Acropolis components include High Availability out-of-the-box, removing the requirement to design (and license) HA solutions for individual management components.

Data Protection / Replication

The Nutanix CVM includes built-in data protection and replication components, removing the requirement to design/deploy/manage one or more Virtual Appliances. This also avoids the need to design, implement and scale these components as the environment grows.

All of the data protection and replication features are also available via Prism and, importantly, are configured on a per-VM basis, making configuration easier and reducing overheads.

Summary

In summary, the simplicity of AHV eliminates:

- Single points of failure for all management components out of the box

- The requirement for dedicated management clusters for Acropolis components

- Dependency on 3rd Party Operating Systems & Database platforms

- The requirement for design, implementation and ongoing maintenance for Virtualization management components

- The need to design, install, configure & maintain a Web or Desktop type management interface

- Complexity such as:

  - The requirement to install software or appliances to allow patching / upgrading

  - The requirement for an SME to design a solution to make management components highly available

  - The requirement to follow complex Hardening Guides to achieve security compliance

  - The requirement for additional Appliances/interfaces and external dependencies (e.g. Database Platforms)

  - The requirement to license features to allow Centralised configuration management of nodes