Datastores are a logical construct which allows DAS, SAN or NAS storage to be presented to ESXi. In the case of SAN and NAS storage it is generally “shared storage”, which enables virtualization features such as HA, DRS and vMotion.

When storage is presented to ESXi from DAS or SAN (block based) storage it is formatted with VMFS (Virtual Machine File System) and when storage is presented via file storage (NFS), it is presented to ESXi as an NFS mount.

Regardless of whether datastores are presented via block (iSCSI, FC, FCoE) or file based (NFS) protocols, they both host VMDKs (Virtual Machine Disks), which are block based storage. In the case of NFS, the SCSI commands are emulated by the hypervisor. This process is explained in Emulation of the SCSI Protocol and can be compared to Hyper-V SMB 3.0 (File) storage with VHDX, which also emulates SCSI commands over File (SMB 3.0) storage.

The following diagram is courtesy of http://pubs.vmware.com and shows “host1” and “host2” running VMs across VMFS (block) and NFS (file) datastores. Note the VMs residing on datastore1 and datastore2 all have .vmx and .vmdk files and operate in the exact same way from the perspective of the VM, Guest OS and applications.

The next paragraph is controversial and may be hotly debated, but to the best of my knowledge, and that of the countless industry experts (from several different vendors) I have investigated this with over the last year, including VMware’s formal position, it is completely true, and I welcome any credible and detailed evidence to the contrary! (I even asked this question of Microsoft here).

Using either VMFS or NFS datastores meets the technical requirements for Exchange, namely Write Ordering, Forced Unit Access (FUA) and SCSI abort/reset commands. Because drives within Windows are formatted with NTFS, which is a journalling file system, the requirement to protect against Torn I/O is also met.

With that being said, Microsoft currently do not support Exchange running in VMDKs on NFS datastores.

The below is a quote from Exchange 2013 storage configuration options outlining the storage support statement for MS Exchange, with the final sentence applying to NFS datastores.

All storage used by Exchange for storage of Exchange data must be block-level storage because Exchange 2013 doesn’t support the use of NAS volumes, other than in the SMB 3.0 scenario outlined in the topic Exchange 2013 virtualization. Also, in a virtualized environment, NAS storage that’s presented to the guest as block-level storage via the hypervisor isn’t supported.

If you’re interested in finding out more about MS Exchange running in VMDKs on NFS datastores, see the links at the end of this post.

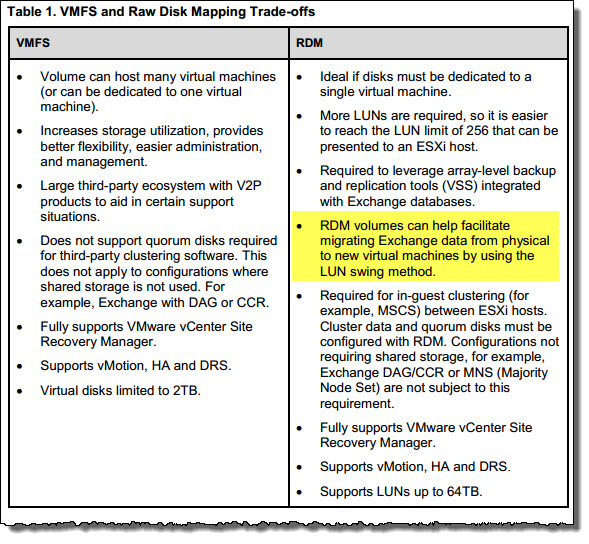

Now let’s discuss the limitations of datastores, what impact they have on vSphere environments with MS Exchange deployments, and why.

Number of LUNs / NFS Mounts : 256

This can be a significant constraint when using one or more datastores per Exchange MBX/MSR VM; however, in my opinion, this should not be necessary, nor is it recommended.

Generally, Exchange VMDKs can be mixed with other VMs in the same datastore provided there is no performance constraint. As such, keep high I/O VMs (including other Exchange VMs) in different datastores.

As discussed in Part 10, if legacy per LUN snapshot based backup solutions are being used, then in-Guest iSCSI may have to be used, but for new deployments, especially where new storage will be purchased, per LUN solutions should not be considered!

Number of Paths per ESXi host : 1024

If using VMFS datastores, the simple fact is that 4 paths per LUN is the maximum you can use if you plan to reach or near the limit of 256 datastores (1024 paths / 256 LUNs = 4 paths per LUN). This is not a performance limiting factor with any enterprise grade storage solution.

Number of Paths per LUN : 32

If you configure 32 paths per LUN, you immediately restrict yourself to 32 LUNs per ESXi host (and vSphere cluster), since 1024 paths / 32 paths per LUN = 32 LUNs, so don’t do it! As mentioned earlier, 4 paths per LUN is the maximum if you plan to reach 256 datastores. Do the math and this limit is not a problem.
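The path arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, assuming every LUN is given the same number of paths and using the maximums quoted in this post (1024 paths per host, 256 LUNs per host, 32 paths per LUN). The function name `max_luns` is my own and not part of any VMware tooling.

```python
# Configuration maximums as quoted in this post; verify them for your vSphere version.
MAX_PATHS_PER_HOST = 1024
MAX_LUNS_PER_HOST = 256
MAX_PATHS_PER_LUN = 32


def max_luns(paths_per_lun: int) -> int:
    """Return how many LUNs one ESXi host can address at a given path count.

    Assumes every LUN uses the same number of paths (a simplification).
    """
    if not 1 <= paths_per_lun <= MAX_PATHS_PER_LUN:
        raise ValueError(f"paths_per_lun must be between 1 and {MAX_PATHS_PER_LUN}")
    # The host runs out of either paths or LUN slots, whichever comes first.
    return min(MAX_LUNS_PER_HOST, MAX_PATHS_PER_HOST // paths_per_lun)


for paths in (2, 4, 8, 16, 32):
    print(f"{paths:>2} paths per LUN -> up to {max_luns(paths)} LUNs per host")
```

With 4 paths per LUN the result is still 256, and every doubling beyond that halves the number of LUNs the host can see.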

Number of Paths per NFS mount : N/A

NFS mounts connect over IP to the Storage Controllers via vNetworking so there is no maximum as such although with NFS v3 only one physical NIC will be used at a time per IP subnet. This topic will be covered in a future post on vNetworking for MS Exchange.

VMFS Datastore Maximum Size : 64TB

256 LUNs x 64TB = 16,384TB per vSphere HA cluster. This is not a problem.
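For anyone who wants to re-run that calculation with a different datastore count or size, here is a small sketch. The function name is hypothetical, and the conversion to PB assumes 1024TB per PB.

```python
# Maximums as quoted in this post; verify them for your vSphere version.
MAX_DATASTORES = 256
MAX_VMFS_SIZE_TB = 64


def max_vmfs_capacity_tb(datastores: int = MAX_DATASTORES,
                         size_tb: int = MAX_VMFS_SIZE_TB) -> int:
    """Return the total addressable VMFS capacity in TB."""
    return datastores * size_tb


total_tb = max_vmfs_capacity_tb()
# Assumes 1024 TB per PB for the conversion.
print(f"{total_tb:,} TB ({total_tb // 1024} PB)")
```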

NFS Datastore Maximum Size : Varies depending on vendor

The limit depends on the vendor, but it’s typically higher than the VMFS limit of 64TB per mount, with some vendors not having a limit.

So you’re safe to assume >=16,384TB but always check with your current or potential storage vendor.

ESXi hosts per Volume (Datastore) : 64 (Note: HA cluster limit of 32)

As a vSphere cluster is currently limited to 32 hosts, this limitation isn’t really an issue. With vSphere 6.0 it is expected that cluster size will increase to 64 hosts, but ESXi hosts per Volume is not a maximum I have ever heard of being reached.

Recommendations:

1. If you require a fully supported configuration use VMFS datastores.

2. Maximum 4 paths per LUN to ensure maximum scalability (if required).

3. Consider the underlying storage configuration and type of a datastore before deploying MS Exchange.

4. Do not deploy MS Exchange VMDKs onto datastores with other high I/O workloads.

5. When mixing workloads on a datastore, enable SIOC to ensure fairness between workloads in the event of storage contention.

6. Spread Exchange VMDKs across multiple datastores for maximum performance and resiliency. e.g.: 12 VMDKs per Exchange MBX/MSR VM across 4 mixed workload datastores.

7. Do not use dedicated datastore/s per MS Exchange database or VM. (This is unnecessary from a performance perspective)

8. If choosing to use NFS Datastores, purchase Premier Support from Microsoft and negotiate support for NFS. Microsoft do provide support for many customers with Premier Support with Exchange running on NFS datastores although it is not their preference.
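To illustrate recommendation 6, the sketch below spreads 12 VMDKs evenly across 4 datastores using a simple round-robin. It is only an illustration of the even distribution, not a placement tool: the VMDK and datastore names are hypothetical, and a real design would also weigh the I/O profile and free capacity of each datastore.

```python
from itertools import cycle


def spread_vmdks(vmdks, datastores):
    """Assign VMDKs to datastores in round-robin order.

    Returns a dict mapping each datastore name to its list of VMDKs.
    """
    if not datastores:
        raise ValueError("at least one datastore is required")
    placement = {ds: [] for ds in datastores}
    # cycle() repeats the datastore list for as long as there are VMDKs to place.
    for vmdk, ds in zip(vmdks, cycle(datastores)):
        placement[ds].append(vmdk)
    return placement


# Hypothetical names: one Exchange MBX/MSR VM with 12 VMDKs, 4 mixed workload datastores.
vmdks = [f"exch-mbx01_{i}.vmdk" for i in range(1, 13)]
datastores = [f"datastore{i}" for i in range(1, 5)]

placement = spread_vmdks(vmdks, datastores)
for ds, disks in placement.items():
    print(f"{ds}: {len(disks)} VMDKs -> {', '.join(disks)}")
```

Each datastore ends up with 3 VMDKs, so losing or saturating any one datastore affects only a quarter of the VM's disks.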

On a final note, in future posts I will be discussing in detail the underlying storage from a performance and availability perspective with Database Availability Groups in mind.

Thank you to @mattliebowitz for reviewing this post. I highly recommend his book Virtualizing Business Critical Applications by VMware Press. I purchased and reviewed this book in mid 2014, and it is well worth a read!

Back to the Index of How to successfully Virtualize MS Exchange.

Articles on MS Exchange running in VMDK on NFS datastores

1. “Support for Exchange Databases running within VMDKs on NFS datastores”

2. Microsoft Exchange Improvements Suggestions Forum – Exchange on NFS/SMB

3. What does Exchange running in a VMDK on NFS datastore look like to the Guest OS?

4. Integrity of I/O for VMs on NFS Datastores Series

Part 1 – Emulation of the SCSI Protocol

Part 2 – Forced Unit Access (FUA) & Write Through

Part 3 – Write Ordering

Part 4 – Torn Writes

Part 5 – Data Corruption