I saw a tweet recently (below) which inspired me to write this post as there is still a clear misunderstanding of the benefits VAAI provides (even with Virtual Storage Appliances).

I have removed the identity of the individual who wrote the tweet and the people who retweeted this as the goal of this post is solely to correct what I believe is mis-information.

My interpretation of the tweet was (and remains) if a solution uses a Virtual Storage Appliance (VSA) which resides on the ESXi host then VAAI is not providing any benefits.

My opinion on this topic is:

Compared to a traditional centralised NAS (such as a Netapp or EMC Isilon) providing NFS storage with VAAI-NAS support, a Nutanix or VSA solution has exactly the same benefits from VAAI!

My 1st reply to the tweet was:

The test I was referring to with Netapp OnTap Edge can be found here which was posted in Jan 2013, well prior to my joining Nutanix when I was working for IBM where I had been evangelising VAAI/VCAI based solutions for a long time as VAAI/VCAI provides significant value to VMware customers.

The following shows the persons initial reply to my tweet.

I responded with the below mentioning I will do a blog which is what you’re reading now.

I went onto provide some brief replies as shown below.

The main comments from this persons tweets I would summarize (rightly or wrongly) below:

- VAAI is designed only to offload functions externally (or off the ESXi host)

- He/She had not seen any proof of performance advantages from VAAI on VSAs

- Its broken logic to use VAAI with a VSA

Firstly, I would like comment on VAAI being designed to offload functions externally (or off the ESXi host). I don’t disagree VAAI has some functions designed to offload to the (centralised) array but VAAI also has numerous functions which are designed to bring other efficiencies to a vSphere environment.

An example of a feature designed to offload to a central array is the “XCOPY” primitive.

A simple example of what “XCOPY” or Extended Copy provides is offloading a Storage vMotion on block based storage (i.e.: VMFS over iSCSI,FC,FCoE not NFS) to the array so the ESXi host does not have to process the data movement.

This VAAI primitive would likely be of little benefit in a VSA environment where the storage is presented is block based and Storage DRS for example was used. The data movement would be offloaded from ESXi to the VSA running on ESXi and host would still be burdened with the SvMotion.

However XCOPY is only one of the many primitives of VAAI, and VAAI does alot more than just offload Storage vMotions.

For the purpose of this post, I will be discussing VAAI with Nutanix whos Software defined storage solution runs in a VM on every ESXi host in a Nutanix cluster.

Note: This information is also relevant to other VSAs which support VAAI-NAS.

So what benefit does VAAI provide to Nutanix or a VSA solution running NFS?

Nutanix deploys by default with NFS and supports the VAAI-NAS primitives which are:

- Full File Clone

- Fast File Clone

- Reserve Space

- Extended Statistics

Note: XCOPY is not supported on NFS, importantly and specifically speaking for Nutanix it is not required as SvMotion will be rarely if ever used with Nutanix solutions.

See my post “Storage DRS and Nutanix – To use, or not to use, that is the question?” for more details on why SvMotion is rarely needed when using Nutanix.

For more details of VAAI primitives, Cormac Hogan (@CormacJHogan) wrote an excellent post which can be found here.

Now here is an example of a significant performance benefits of VAAI with Nutanix.

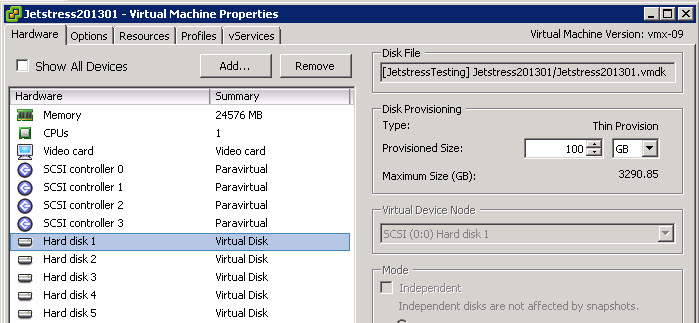

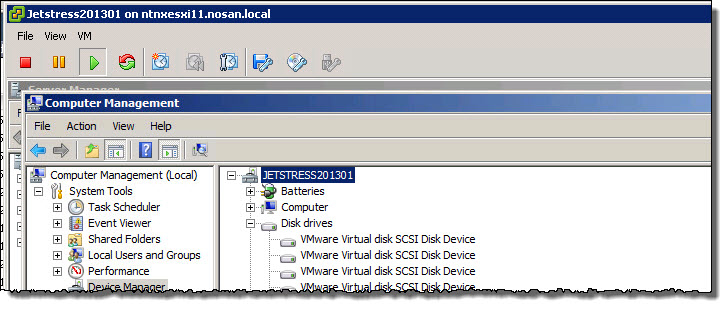

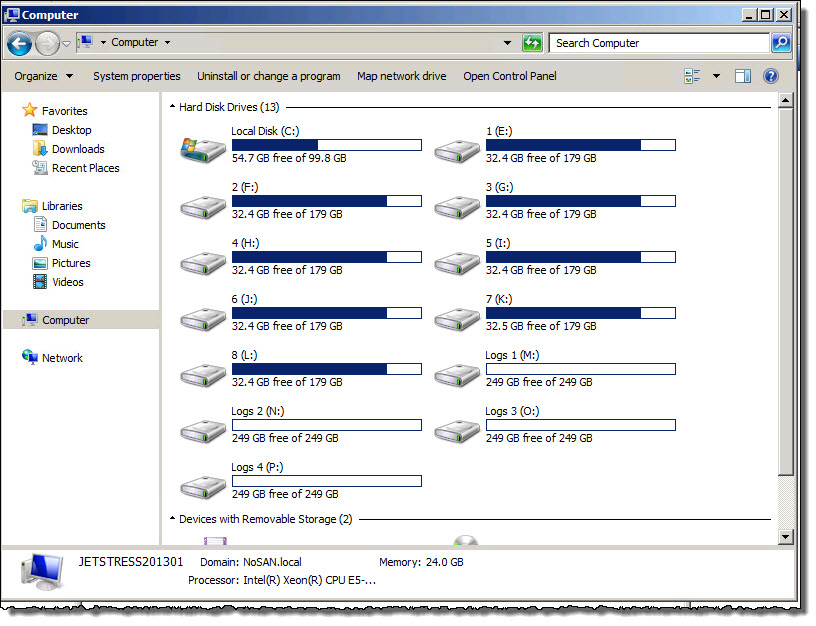

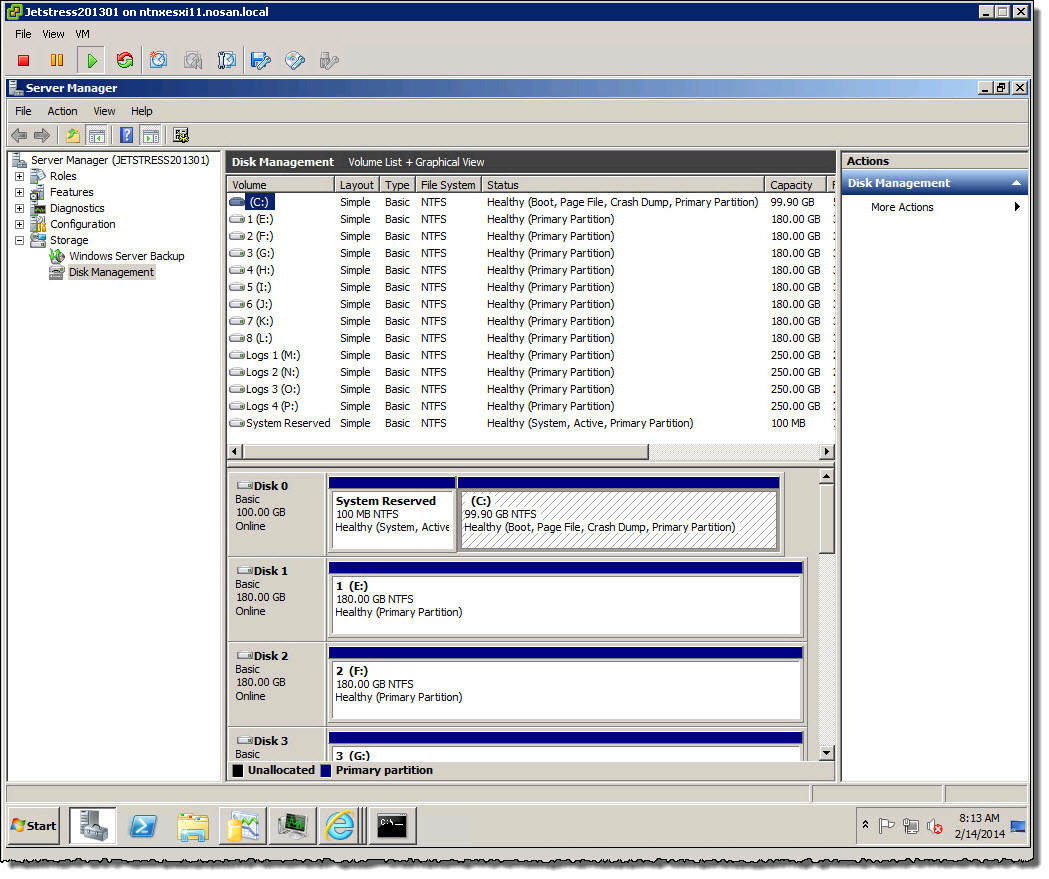

Lets look at Clone of a VM on a Nutanix platform, the VMs details are below.

The VM I have used for this test resides on a datastore called “Management” (as per the above image) which presented via NFS and has VAAI (Hardware Acceleration) enabled as shown below.

Now if I do a simple clone of a VM (as shown below) if the VM is turned on, VAAI-NAS is bypassed as the “Fast File Clone” primitive only works on VMs which are powered off.

So a simple way to test the performance benefits of VAAI on any platform (including Hyper-converged such as Nutanix, a Virtual Storage Appliance (VSA) such as Netapp Ontap Edge or traditional centralised SAN or NAS) is to clone a VM while powered on then shut-down the VM and clone it again.

I performed this test and the first clone with the VM powered on started at 1:17:23 PM and finished at 1:26:12 PM, so a total of 8 mins 49 seconds.

Next I shut down the VM and repeated the clone operation.

As we can see in the above screen capture from the 2nd clone started at 1:26:49 PM and finished at 1:26:54 PM, so a total of 5 seconds.

The reason for the huge difference in the speed of the two clones is because VAAI-NAS “Fast File Clone” primitive offloaded the 2nd clone to the Nutanix platform (which runs as a VM on the ESXi host) which has intelligently cloned the VM (using metadata resulting in almost zero data creation) as opposed to 1st clone where VAAI-NAS was not used which resulted in the hypervisor and storage solution having to read 11.18GB of data (being the source VM – Admin01) and write a full copy of the same data resulting in effectively >22GB of data movement in the environment.

Now from a capacity savings perspective, a simple way to demonstrate the capacity savings of VAAI on any platform is to clone a VM multiple times and compare the before and after datastore statistics.

Before I performed this test I captured a baseline of the Management datastore as shown below.

The above highlighted areas show:

- Virtual Machines and Templates as 83

- Capacity 8.49TB

- Provisioned Space 7.09TB

- Free Space 7.01TB

I then cloned the Admin01 VM a total of 7 times.

Immediately following the last clone completing I took the below screen shot of the Management datastores statistics.

The above highlighted areas in the updated datastore summary show:

- Virtual Machines and Templates INCREASED by 7 to 90 (as I cloned 7 VMs)

- Capacity remained the same at 8.49TB

- Provisioned Space INCREASED to 7.29TB as we cloned 7 x ~40Gb VMs (Total of ~280GB)

- Free Space REMAINED THE SAME at 7.01TB due to VAAI-NAS Fast File Clone primitive working with the Nutanix Distributed File System.

So VAAI-NAS allowed a VM of ~11GB of used storage (~40GB provisioned) to be cloned without using any significant additional disk space and the clones were each done in between 5 and 7 seconds each.

So some of the benefits VAAI-NAS provides to Nutanix (which some people would term as a VSA type solution) include:

- Near instant VM cloning via vSphere Client/s (as shown above)

- Near instant Horizon View Linked Clone deployments (VCAI) – Similar to example shown.

- Near instant vCloud Director clones (via FAST Provisioning) – Similar to example shown.

- Major capacity savings by using Intelligent cloning rather than Full Clones (As shown above)

- Lower CPU overhead for both ESXi hosts AND Nutanix Controller VM (CVM)

- Ability to create EagerZeroThick VMDKs on NFS (e.g.: To support Fault Tolerance & clustered workloads such as Oracle RAC)

- Enhanced ability to get statistics on file sizes , capacity usage etc on NFS

In Summary:

Overall I would say that VMware have developed an excellent API in VAAI and Nutanix along with VSA providers having support for VAAI provides major advantages and value to our joint customers with VMware.

It would be broken logic NOT to leverage the advantages of VAAI regardless of storage type (VSA, Nutanix or traditional centralized SAN/NAS) and for the vast majority of vSphere deployments, any storage solution not supporting (or having issues/bugs with) VAAI will have significant downsides.

I am looking forward to ongoing developments from VMware such as vVols and VASA 2.0 to continue to enhance storage of vSphere solutions in the future.

I hope customers and architects now have the correct information to make the most effective design and purchasing recommendations to meet/exceed customer requirements.