If you have not read Parts 1 through 5: we have already proven several claims by HPE Simplivity regarding Nutanix to be false, and explored the misleading way in which HPE SVT promotes data efficiency.

We continue with Part 6, where we discuss HPE’s claim that “Nutanix data efficiency stats are stealthier than a ninja” (see the tweet below).

#HPE #HyperConverged 380 #HPEDare2Compare #Nutanix #HPEDiscover https://t.co/YYMycBpI7i pic.twitter.com/o2rPsabIzE

— HPE GreenLake (@HPE_GreenLake) June 5, 2017

While HPE’s claim is an attempt to create Fear, Uncertainty and Doubt (FUD), HPE are partially correct: we (Nutanix) have done a very poor job of promoting the arguably market-leading data efficiency that Nutanix provides.

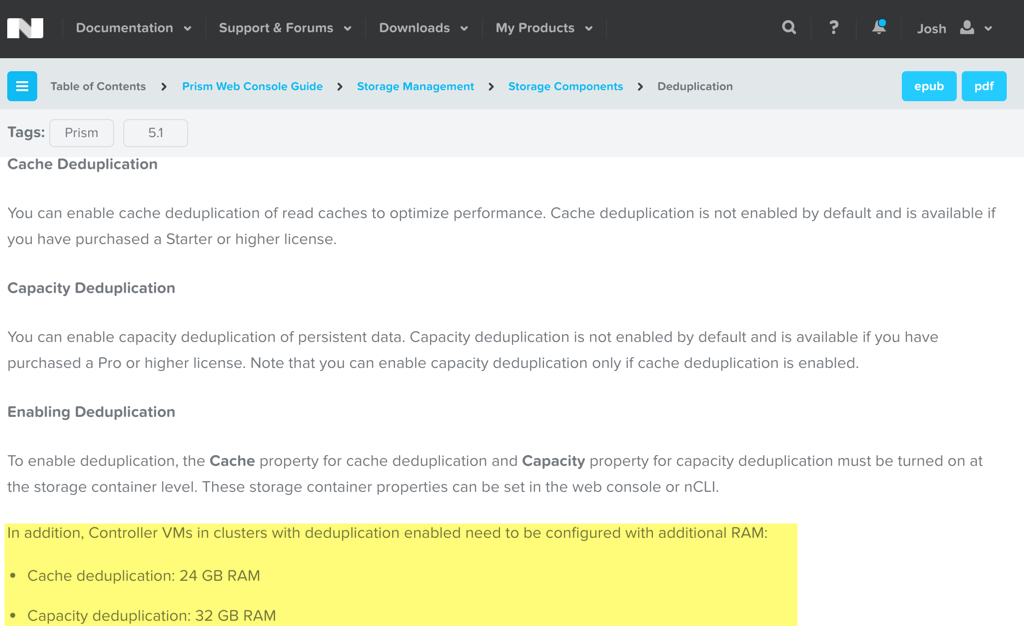

In fact, several colleagues and I raised a feature request to report the ADSF data efficiencies in a clear and detailed way, and I am pleased to say these changes were included in the recent AOS 5.1 release.

What Nutanix users now see in the PRISM “Storage” view is (as shown below):

- A Capacity optimization overview

- Data reduction ratio which is made up of deduplication, compression and erasure coding savings*.

- Data reduction savings which is a total GB/TB/PB value from data reduction

- An Overall Efficiency ratio which is a combination of Data Reduction, Cloning and Thin Provisioning

*Metadata copies/snapshots/pointers etc. are not included in the deduplication value as they are not deduplication.

The resulting summary is very clear and easy to understand so customers can see what efficiencies are from data reduction, and which savings (which typically form by far the largest “efficiency”) come from Cloning and thin provisioning.
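To make the distinction between the two ratios concrete, here is a minimal sketch in Python. The formulas are an assumption for illustration (logical data divided by physical data stored), not PRISM's documented calculation, and the `EfficiencyInputs` fields and the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EfficiencyInputs:
    """Hypothetical capacity figures, all in the same unit (e.g. TiB)."""
    physical_used: float              # capacity actually consumed on disk
    dedup_savings: float              # saved by deduplication
    compression_savings: float        # saved by compression
    erasure_coding_savings: float     # saved by erasure coding (EC-X)
    cloning_savings: float            # saved by clones
    thin_provisioning_savings: float  # saved by thin provisioning


def data_reduction_savings(x: EfficiencyInputs) -> float:
    # Only dedupe, compression and erasure coding count as data reduction.
    return x.dedup_savings + x.compression_savings + x.erasure_coding_savings


def data_reduction_ratio(x: EfficiencyInputs) -> float:
    # Assumed definition: (physical + data reduction savings) / physical.
    if x.physical_used <= 0:
        raise ValueError("physical_used must be positive")
    return (x.physical_used + data_reduction_savings(x)) / x.physical_used


def overall_efficiency_ratio(x: EfficiencyInputs) -> float:
    # Assumed definition: data reduction plus cloning and thin provisioning.
    if x.physical_used <= 0:
        raise ValueError("physical_used must be positive")
    total = (data_reduction_savings(x)
             + x.cloning_savings
             + x.thin_provisioning_savings)
    return (x.physical_used + total) / x.physical_used


# Made-up example values, not taken from any real cluster.
example = EfficiencyInputs(
    physical_used=10.0,
    dedup_savings=2.0,
    compression_savings=6.0,
    erasure_coding_savings=2.0,
    cloning_savings=10.0,
    thin_provisioning_savings=20.0,
)
print(f"Data reduction ratio: {data_reduction_ratio(example):.2f}:1")
print(f"Overall efficiency:   {overall_efficiency_ratio(example):.2f}:1")
```

With these made-up inputs the overall efficiency ratio is far larger than the data reduction ratio, which is why reporting the two separately matters.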

One major item which will be included in an upcoming release is zero suppression. Zero suppression is a capability which has been in Nutanix Distributed Storage Fabric since Day 1 and it avoids unnecessarily storing zeros, instead storing metadata which achieves the same outcome but is much higher performance and uses much less capacity.

Nutanix snapshots or pointer-based copies (depending on how you refer to them) are also not included in the overall efficiency number. However, these will be included as a separate line item in a future release, as we aim to be very clear regarding what data efficiencies a customer is achieving with Nutanix.

Some vendors recommend Eager Zero Thick (EZT) VMDKs on vSphere and then deduplicate the zeros, which artificially increases the deduplication ratio. Nutanix does not do this, as it’s inefficient to create more data to deduplicate when you can simply avoid writing the data in the first place. However, we do plan to report the savings from zero suppression as a separate line item, as it is a value our platform provides.
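The idea behind zero suppression can be illustrated with a toy block store in Python. This is a simplified sketch of the general technique only, not how the Nutanix Distributed Storage Fabric is implemented; the class name, block size and data structures are all hypothetical.

```python
BLOCK_SIZE = 4096  # hypothetical block size in bytes


class ZeroSuppressingStore:
    """Toy block store that records all-zero blocks as metadata only."""

    def __init__(self) -> None:
        self.blocks: dict[int, bytes] = {}  # block index -> stored data
        self.zero_blocks: set[int] = set()  # indexes known to be all zeros

    def write(self, index: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("data must be exactly one block")
        if not any(data):
            # All zeros: remember the fact in metadata, store no data.
            self.zero_blocks.add(index)
            self.blocks.pop(index, None)
        else:
            self.blocks[index] = bytes(data)
            self.zero_blocks.discard(index)

    def read(self, index: int) -> bytes:
        if index in self.zero_blocks:
            # Zeros are synthesised on read rather than fetched from disk.
            return bytes(BLOCK_SIZE)
        return self.blocks[index]

    def stored_bytes(self) -> int:
        # Capacity consumed by data blocks (metadata is ignored here).
        return len(self.blocks) * BLOCK_SIZE


store = ZeroSuppressingStore()
store.write(0, bytes(BLOCK_SIZE))      # a zeroed block, as EZT would write
store.write(1, b"\x01" * BLOCK_SIZE)   # a block with real data
print(store.stored_bytes())            # only the non-zero block uses capacity
```

In this sketch the zeroed block never reaches the data store at all, so there is nothing to deduplicate afterwards, which is the contrast with the EZT-then-dedupe approach described above.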

For a more detailed view, Nutanix customers can drill down into the Storage > Diagram view, where admins can see each container’s data efficiency breakdown (as shown below).

As we can see, Nutanix is very transparent: it shows which data reduction features are enabled, what ratio is being achieved, the total, used, reserved and even Thick Provisioned storage, an effective free capacity based on physical capacity multiplied by the data reduction ratio, and an overall efficiency value.
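The effective free figure is simple arithmetic. The sketch below assumes that "physical" means the physical free capacity of the container; the function name and the sample values are hypothetical.

```python
def effective_free(physical_free: float, data_reduction_ratio: float) -> float:
    """Estimate effective free capacity, in the same unit as physical_free.

    Assumption: effective free = physical free x data reduction ratio.
    """
    if physical_free < 0:
        raise ValueError("physical_free must not be negative")
    if data_reduction_ratio <= 0:
        raise ValueError("data_reduction_ratio must be positive")
    return physical_free * data_reduction_ratio


# Made-up example: 10 TiB physically free at a 2:1 data reduction ratio.
print(effective_free(10.0, 2.0))
```

Note that such an estimate only holds if newly written data reduces as well as the data already stored.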

Now that we’ve covered how Nutanix measures and reports on data reduction/efficiency, I’d like to highlight a critical factor when discussing it: data efficiency is totally dependent on the individual customer’s data. For the same dataset, the results from vendors with the same capabilities (e.g. Deduplication, Compression and Erasure Coding (EC-X)) are unlikely to be vastly different (or, better put, to change a business outcome one way or another), despite what each vendor will say about their implementation of such technologies.

In short: The biggest factor in the achieved data reduction is not the vendor, it’s the customer data.

With that said, if you’re comparing HPE SVT and Nutanix, then there is a pretty major delta between the two products in terms of capabilities and that is because Nutanix supports Erasure Coding (EC-X) and HPE SVT does not.

As a result, Nutanix has a major advantage, as Erasure Coding in the Nutanix Acropolis Distributed Storage Fabric (ADSF) is complementary to both deduplication and compression.

Unlike Compression and Deduplication, Erasure Coding can provide savings (or another way to look at it would be lower data redundancy overheads) regardless of the data type.

So where Deduplication and Compression will get minimal or no savings for data such as video files, Erasure Coding still provides savings, so the delta between Nutanix and HPE SVT will only increase in Nutanix’s favour the less the customer data will dedupe and/or compress.

HPE SVT, on the other hand, has a RAID overhead (RAID 6 being N-2 usable or RAID 60 being N-4 usable) and, on top of that, uses replication (2 copies / 50% usable), giving a usable capacity well below 50% of raw, depending on the number of drives per node.

Nutanix, using RF2 and EC-X, provides between 50% (minimum) and 80% (maximum) usable capacity of raw, and with RF3 (N+2) between 33% (minimum) and 66% (maximum) usable, excluding the benefits of compression and deduplication.
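The usable capacity figures above can be reproduced with some simple fractions. The sketch below is illustrative only: the erasure coding strip widths (4 data + 1 parity and 4 data + 2 parity) are assumptions chosen to match the 80% and 66% maximums quoted above, and the drive counts per node in the RAID examples are hypothetical.

```python
from fractions import Fraction


def replication_usable(copies: int) -> Fraction:
    """Usable fraction of raw capacity when every block is stored `copies` times."""
    return Fraction(1, copies)


def erasure_coded_usable(data_blocks: int, parity_blocks: int) -> Fraction:
    """Usable fraction of raw capacity for a data + parity erasure coded strip."""
    return Fraction(data_blocks, data_blocks + parity_blocks)


def raid_plus_replication_usable(drives: int, parity_drives: int,
                                 copies: int) -> Fraction:
    """Usable fraction when RAID parity overhead is combined with replication."""
    if drives <= parity_drives:
        raise ValueError("need more drives than parity drives")
    return Fraction(drives - parity_drives, drives) * Fraction(1, copies)


def pct(value: Fraction) -> str:
    return f"{float(value):.1%}"


# Replication only (minimum) versus fully erasure coded (maximum).
print("RF2 minimum:", pct(replication_usable(2)))
print("RF2 + EC 4/1 maximum:", pct(erasure_coded_usable(4, 1)))
print("RF3 minimum:", pct(replication_usable(3)))
print("RF3 + EC 4/2 maximum:", pct(erasure_coded_usable(4, 2)))

# RAID 6 (N-2) or RAID 60 (N-4) per node, then 2 copies across nodes.
# The 8 and 12 drive counts are made-up examples.
print("RAID 6, 8 drives, 2 copies:", pct(raid_plus_replication_usable(8, 2, 2)))
print("RAID 60, 12 drives, 2 copies:", pct(raid_plus_replication_usable(12, 4, 2)))
```

Because the RAID overhead is multiplied by the 50% replication overhead, the combined result can never reach 50% of raw, whatever the number of drives per node.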

The next major factor in data efficiency ratios is how they are measured!

In Part 1 I covered how misleading HPE SVT’s 10:1 efficiency guarantee is, and it is a great example of why it can be difficult to compare apples to apples between vendors. Nutanix, on the other hand, does not measure data efficiency in the same misleading manner.

In Summary:

- Nutanix AOS 5.1 has comprehensive data reduction/efficiency reporting within the PRISM HTML GUI

- Nutanix data reduction capabilities exceed those of HPE SVT, as both products have Dedupe and Compression, but Erasure Coding (EC-X) is only supported on Nutanix

- All data reduction capabilities on Nutanix are complementary, so Dedupe, Compression and Erasure Coding can all work together to maximise savings.

- Erasure Coding provides data reduction even for data which is not compressible or dedupeable

- Nutanix data efficiency stats are easily visible in the PRISM GUI and are much more detailed than HPE SVT

Return to the Dare2Compare Index:

But wait, there’s more!

As far as data reduction results are concerned, they are all over Twitter, and a simple search comes up with many examples. The first one is my favorite, not because of the data reduction ratio itself but because it shows one of the major values of a 100% software solution: a simple software upgrade (which is one-click, rolling and non-disruptive) gave the customer a significantly higher data reduction ratio. So basically, the customer got more capacity for free!

Note: None of the below show the latest data efficiency reporting capabilities from AOS 5.1.

I upgraded Nutanix AOS from 4.7.2 to 5.0. After upgrade, about 1.5 TB storage space saved. pic.twitter.com/TH9IkRDeLs

— Taner Çort (@tanercort) February 14, 2017

Here are a few other examples which I found using this Twitter search:

Real World Data Reduction on @nutanix Hybrid Clusters. Windows Top, Linux Bottom, 32 Nodes Each, Compression Only. pic.twitter.com/kXnHVDR4gg

— Lord WebScale Webster (@vcdxnz001) August 2, 2016

Updated our #nutanix production cluster last week from AOS 4.7.3 to 5.0.0.2 and got 1 TB additional disk savings. 1.89>2.02:1 reduction. pic.twitter.com/PDDJXjSlEs

— Mattias Sundling (@msundling) January 23, 2017

Ppl ask me compression Nutanix offers.I spunup 250 win 2K8R2 & this is what I got.2 things to look at usage & compression @nutanix #HCI pic.twitter.com/ADTFfaeuRB

— shankar (@glkshankar) January 30, 2017

Example of #compression and #deduplication thanks to #hyperconvergence #nutanix https://t.co/UXm2KVVwNu #reallife #sysadmin #dsi pic.twitter.com/lw3qioFcg0

— SIIUM (@siium_FR) January 19, 2017