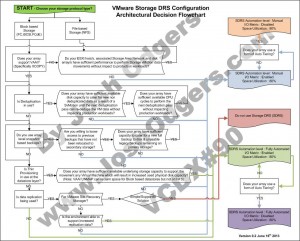

I was speaking to a number of people recently, who were trying to come up with a one size fits all Storage DRS configuration for a reference architecture document.

As Storage DRS is a reasonably complicated feature, it was my opinion that a one size fits all would not be suitable, and that multiple examples should be provided when writing a reference architecture.

A collegue suggested a flowchart would assist in making the right decision around Storage DRS, so I took up the challenge to put one together.

The below is my version 0.1 of the flowchart, which I thought I would post and hopefully get some good feedback from the community, and create a good guide for those who may not have the in-depth knowledge or experience, too choose what should be in most cases an appropriate configuration for SDRS.

This also compliments some of my previous example architectural decisions which are shown in the related topic section below.

As always, feedback is always welcomed.

I hope you find this helpful.

* Updated to include the previously missing “NO” option for Data replication.

Related Articles

1. Example Architectural Decision – Storage DRS configuration for NFS datastores

2. Example Architectural Decision – Storage DRS configuration for VMFS datastores