When I saw a post (20+ Common VSAN Questions) by Chuck Hollis on VMware’s corporate blog claiming (extract below) a “stunning performance advantage (over Nutanix) on identical hardware with most demanding datacenter workloads”, I honestly wondered where he gets this nonsense.

Then, when I saw the Microsoft Applications on Virtual SAN 6.0 white paper released, I thought I would check out what VMware is claiming in terms of this stunning performance advantage for an application I have done lots of work with lately: MS Exchange.

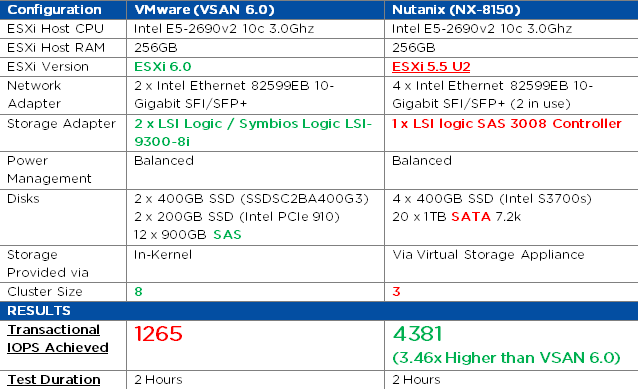

I have summarized the VMware white paper and the Nutanix testing I personally performed in the table below. These tests were not exactly the same; however, the ESXi host CPU and RAM were identical, and both tests used 2 x 10Gb as well as 4 x SSD devices.

The main differences were ESXi 6.0 for the VSAN testing versus ESXi 5.5 U2 for Nutanix; I’d say that’s advantage number 1 for VMware. Advantage number 2 is that VMware used two LSI controllers, whereas my testing used one. On top of that, VMware had a cluster size of 8, whereas my testing (in this case) only used 3. The larger cluster size is a huge advantage for a distributed platform, especially VSAN, since it does not have data locality: the more nodes in the cluster, the less chance of a bottleneck.

Nutanix has one advantage, more spindles, but the advantage really goes away when you consider they are SATA compared to VSAN using SAS. But if you really want to kick up a stink about Nutanix having more HDDs, take 100 IOPS per drive (which is much more than you can get from a SATA drive consistently) off the Nutanix Jetstress result.
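For anyone who does want to apply that discount, the arithmetic is simple. Here is a minimal sketch; the function name and the sample numbers are hypothetical placeholders of mine, not figures from either test, and I am assuming the discount applies only to the extra HDDs.

```python
def discount_extra_hdds(achieved_iops, extra_hdds, iops_per_hdd=100.0):
    """Subtract a per-drive IOPS allowance for each extra HDD.

    achieved_iops: the Jetstress achieved IOPS figure (placeholder input).
    extra_hdds:    how many more HDDs one platform had than the other.
    iops_per_hdd:  the generous 100 IOPS per SATA drive allowance.
    """
    if extra_hdds < 0:
        raise ValueError("extra_hdds must not be negative")
    # Never report a negative result if the discount exceeds the total.
    return max(achieved_iops - extra_hdds * iops_per_hdd, 0.0)


# Placeholder numbers only, NOT taken from the VMware or Nutanix results.
print(discount_extra_hdds(1000, 4))  # 600.0
```

Plug in the achieved IOPS from the Jetstress report and the difference in HDD count to get the adjusted figure.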

I have highlighted the areas where I feel one vendor is at a disadvantage in Red, and the opposing solution in Green. Regardless of these opinions, the results really do speak for themselves.

So here is a summary of the testing performed by each vendor and the results:

The VMware white paper did not show the Jetstress report, however for transparency I have copied the Nutanix Test Summary below.

Summary: Nutanix has a stunning performance advantage over VSAN 6.0 even on identical or lesser hardware, with an older version of ESXi and lower-spec HDDs, while (apparently) having a significant disadvantage by not running in the kernel.

![[activities]Saurus3_414x2](http://www.joshodgers.com/wp-content/uploads/2015/04/activitiesSaurus3_414x2.jpg)