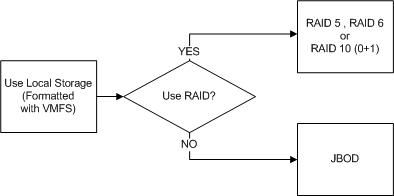

As discussed in Part 7, Local Storage is probably the most basic form of storage we can present to ESXi and use for Exchange MBX/MSR VMs.

The below screen shot shows what local storage can look like to an ESXi host.

As we can see above, the highlighted datastore is simply an SSD formatted with VMFS5. So in this case a single drive not running RAID, and therefore in the event of the drive failing, any data on the drive would be permanently lost.

Note: The above image is simply an example. In reality multiple drives most likely SAS or SATA would be used as SSD is unnecessary for Exchange.

In some ways this is very similar to a physical Exchange deployment on JBOD storage and I would like to echo the recommendations Microsoft give for JBOD deployments from the Exchange 2013 storage configuration options guide and say for JBOD deployments, I strongly recommend at least 3 database copies.

As per the recommendation in Part 4 (DRS), MS Exchange MBX/MSR VMs should always run on separate ESXi hosts to ensure a single host failure does not potentially cause an issue for the DAG. This is especially important because if two Exchange servers shared the same ESXi host and local storage, a single ESXi host outage could cause data loss and downtime for part or all of the Exchange environment.

The below is a screen shot from the Exchange 2013 storage configuration options guide showing the recommendations based on RAID or JBOD deployments. In my option these recommendations also apply to virtualized Exchange deployments on Local storage.

Another option is to use Local Storage in a RAID configuration to eliminate the Single Point of Failure (SPOF) of a single drive failure.

Again, I agree with Microsoft’s recommendations and suggest at least two database copies when using a RAID configuration and again, each Exchange VM must run on its own ESXi host on dedicated physical disks.

Note: The RAID controller itself is still a SPOF which is why multiple copies is recommended from both an availability and data protection perspective.

Let’s now discuss the pros and cons for using Local Storage with JBOD for your Virtualized Exchange Deployment.

PROS

1. Generally lower cost per GB than centralized storage (e.g.: SAN)

2. Higher usable capacity per drive compared to RAID or centralized storage configurations using RAID or other propitiatory data protection techniques.

3. Local JBOD Storage formatted with VMFS is a fully supported configuration

CONS

1. No protection from data loss in the event of a JBOD drive failure. Note: For non DAG deployments, RAID and 3rd party backups should always be used!

2. Performance/Capacity in JBOD deployments is limited to the capabilities of a single drive.

3. Loss of Virtualization functionality such as HA / DRS and vMotion (without performing a Storage vMotion every time)

4. Can be difficult/costly to scale when nearing capacity.

5. Increased Management (Operational) overheads managing decentralized storage

6. At least 3 database copies are recommended, requiring more Exchange MBX/MSR servers.

7. Little/no protection against data corruption which may lead to all DAG copies suffering corruption. Note: If the corruption is not discovered in time, LAGGED copies can also be compromised.

8. Capacity cannot be shared between between ESXi hosts which may lead to inefficient use of the available capacity.

Next here are some pros and cons for using Local Storage with RAID for your Virtualized Exchange Deployment.

PROS

1. Generally lower cost per GB than centralized storage (e.g.: SAN)

2. A single drive failure will not cause data loss or a DAG failover

3. Performance is not limited to a single drives capabilities

4.Local Storage with RAID formatted with VMFS is a fully supported configuration

5. As there is no data loss with a single drive failure, less database copies are required (2 instead of >=3 for JBOD)

CONS

1. Increased Management (Operational) overheads managing decentralized storage

2. Performance/Capacity is limited to the capabilities of a single drive

3. Loss of Virtualization functionality such as HA / DRS and vMotion (without performing a Storage vMotion every time)

4. Little/no protection against data corruption which may lead to all DAG copies suffering corruption. Note: If the corruption is not discovered in time, LAGGED copies can also be compromised.

5. Capacity cannot be shared between ESXi hosts which may lead to inefficient use of the available capacity

6. Performance is constrained by a single RAID controller / set of drives and can be difficult/costly to scale when nearing capacity.

For more information about data corruption for JBOD or RAID deployments, see “Data Corruption“.

Recommendations:

1. When using local storage, (JBOD or RAID), as per Part 4, run only one Exchange MBX/MSR VM per ESXi host

2. Use dedicated physical disks for Exchange MBX/MSR VM (i.e.: Do not share the same disks with other workloads)

3. Store the Windows OS / Exchange application VMDK on local storage which is configured with RAID to ensure a single drive does not cause the VM an outage.

4. Ensure ESXi itself is install on local storage configured with RAID (and not a USB key) as the Exchange VM is dependant on that host and is not protected by vSphere HA. Nor is it easily/quickly portable due to the storage not being shared.

Summary:

Using Local Storage in either a JBOD or RAID configuration is fully supported by Microsoft and is a valid option for MS Exchange deployments.

In my opinion Local Storage deployments have more downsides than upsides and I would recommend considering other storage options for Virtualized Exchange deployments.

Other options along with my recommended options will be discussed in the next 3 parts of this series.

Back to the Index of How to successfully Virtualize MS Exchange.

~ Post Updated January 2nd 2015 Thanks to feedback from @zerszenyi ~