I wrote a post in April 2015 titled “Peak Performance vs Real World Performance” which discusses how benchmarks are not realistic and the performance shown in benchmarks can rarely be reproduced with real workloads. It has been one of my most popular posts, and I have had overwhelmingly positive feedback, with only a select few still pushing unrealistic peak performance benchmarks as being of value to customers.

I thought I would whip up a post showing an example of benchmarks vs real world performance requirements using MS Exchange Jetstress on Nutanix.

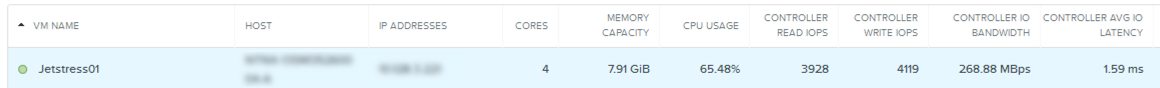

The below is a screen shot from Nutanix PRISM HTML based GUI showing a Virtual Machines Read/Write IOPS , bandwidth and latency during a MS Exchange Jetstress benchmark.

The screen shot shows ~4000 Read IOPS and ~4000 Write IOPS at a latency of 1.59ms.

But what does the above really tell us and what does it mean to a customer?

I’ve been quoted as saying “Benchmarks are of little value without context specific to customer requirements!” and I stand by this statement.

Let’s now look at an example of a real customers requirement:

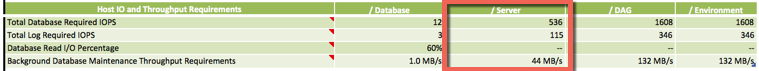

The below is from the Exchange server role requirements calculator and it is a screen shot from the Role requirements tab which shows an estimate of the IOPS required for the Databases and Logs for a single Exchange instance.

It shows the required IOPS being 536 for the databases and 115 for the logs.

Note: The sizing calculator was for an environment supporting 20000 mailboxes across 3 mailbox servers. As such, the above IO requirements are for ~6666 users.

So now that we have done the MS Exchange solution sizing (shown above is just the storage performance requirements), we understand the requirement to be around 651 mixed Read/Write IOPS per mailbox VM. We can then take a benchmark such as Jetstress and validate that the solution has sufficient storage performance.

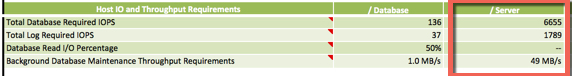

To require the ~8000 IOPS the Jetstress test showed, we would need to scale up each Exchange instances to support have a much larger number of users and have each user send/receive 500 emails per day to reach this requirement.

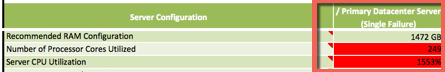

But in scaling up each Exchange instance to reach the peak IOPS that even this 3 year old generation Nutanix node can deliver we would vastly exceed the compute sizing recommendations for Exchange 2013 (being 24vCPUs and 96GB RAM) as shown by the calculator below.

As we can see, for an Exchange instance to require those peak IOPS, we would have to size the Mailbox server VMs with more than 10x the recommended vCPUs (24) and 15x the RAM (96GB). This shows that peak IOPS which can be achieved are not relevant in the real world.

In fact, Exchange generally does not require more than 1000 IOPS. Typically its requires much less, as my earlier example shows. So peak performance numbers are of little/no value as they can’t (and more importantly don’t need to be) reproduced in the real world.

With a tool like Jetstress we can configure a precise Mailbox profiles and test only what you require. If the solution can produce more IOPS than what you need (such as in this example), that’s fine for headroom, but in this day and age where Nutanix allows you to quickly and easily scale (Compute/Storage performance & capacity), I recommend designing for what you need in the foreseeable future (by this I mean 6-12 months) and scale if/when required.

What a benchmark does help you understand is how much headroom a solution has over and above your requirements which can help choose a solution to support mixed workloads, BUT the benchmark would need to be re-ran concurrently with suitable benchmarks for all other applications you intend on mixing to see how the solution behaves with mixed workloads.

As such, single application peak performance benchmarks are almost never valuable (to customers) unless your planning to run application specific silos. I strongly recommend anyone considering implementing an application specific silo, read the following article: Enterprise Architecture & Avoiding tunnel vision.

And… if you’re planning to run application specific silos and/or scaling up workloads to the point they need crazy IOPS, then you’re increasing the size of your failure domains, CAPEX and OPEX which is only doing yourself (or your customer) a disservice. But that’s a topic for another day.

I hope this example shows how real world requirements and performance is vastly different to what a benchmark shows and why peak performance benchmarks should be taken with a grain of salt.

I’ve always said the focus should be on gathering requirements and delivering on business outcomes, not focusing on performance which is typically only a very small part of a solution that delivers a successful business outcome.

Summary:

When sizing an MS Exchange solution on Nutanix, IOPS is not a constraining factor even for large scale deployments. The most common constraining factor is the Microsoft recommended compute maximums being 24 vCPUs and 96GB RAM, which is the same constraint regardless of if you run on Nutanix, or any other virtual / physical platform.

Related Articles: