The location of the Virtual Machine swap file can be critical when deploying vSphere with traditional centralized storage solutions, or with legacy solutions which acknowledge “zeros” or “white space”. This is because the Virtual Machine swap file can be as large as the VM's configured vRAM where memory reservations are not used.

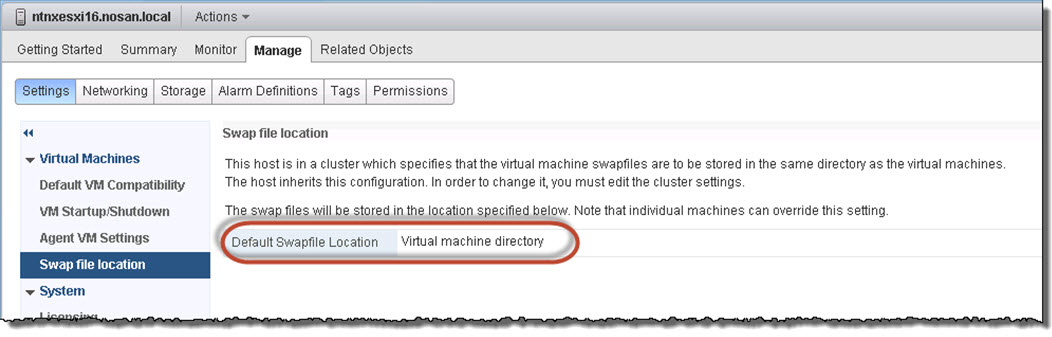

The image above shows the default configuration.

If a VM resides on Tier 1 storage, for example, and does not have a memory reservation set (or has a reservation of less than 100%), the swap file will take up valuable Tier 1 storage capacity.
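To make the capacity impact concrete, the following is a minimal sketch of the sizing arithmetic. It relies on the standard vSphere behaviour that a VM's swap file is sized at its configured memory minus its memory reservation. The function name `swap_file_size_mb` and the VM names and sizes are hypothetical, chosen only for illustration.

```python
def swap_file_size_mb(configured_mb: int, reservation_mb: int = 0) -> int:
    """Return the swap file size: configured memory minus memory reservation."""
    if configured_mb < 0 or reservation_mb < 0:
        raise ValueError("memory values must be non-negative")
    if reservation_mb > configured_mb:
        raise ValueError("reservation cannot exceed configured memory")
    return configured_mb - reservation_mb


# Hypothetical VMs: (name, configured vRAM in MB, memory reservation in MB)
vms = [
    ("app01", 16384, 0),      # no reservation: swap file equals vRAM
    ("db01", 65536, 32768),   # 50% reservation: swap file is half of vRAM
    ("web01", 8192, 8192),    # 100% reservation: swap file is zero
]

# Capacity the swap files would claim on storage that writes out zeros
total_mb = sum(swap_file_size_mb(cfg, res) for _, cfg, res in vms)

for name, cfg, res in vms:
    print(f"{name}: {swap_file_size_mb(cfg, res)} MB swap file")
print(f"Total swap file capacity: {total_mb / 1024:.0f} GB")
```

In this made-up example, three VMs claim 48 GB of Tier 1 capacity for swap files alone, and only the fully reserved VM contributes nothing.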

This can be avoided by specifying a swap-file datastore; however, this introduces complexity, and if the swap-file datastore is on a low tier of storage, performance will degrade significantly when swapping occurs.

Some platforms recommend having different datastores for VM swap files to minimize the overheads on de-duplication, or on replication for environments using SRM, as discussed in “Example Architectural Decision – Virtual Machine Swap-file location for SRM Protected VMs”.

The Nutanix Distributed File System does not write “white space” to disk. As a result, the capacity impact of Virtual Machine swap files is negligible, which makes swap file placement much less of a concern.

The only time Virtual Machine swap files will use storage capacity in the Nutanix Distributed File System is when host memory utilization is >100% and swapping needs to occur.
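The difference between provisioned and consumed swap capacity can be sketched as below. This is a deliberately simplified model, not how ESXi actually decides what to swap: it assumes swap is written only for memory demand beyond the host's physical RAM, and it ignores ballooning, memory compression and transparent page sharing. The function name and all figures are hypothetical.

```python
def swap_capacity_used_gb(host_ram_gb: float, vm_memory_demand_gb: float) -> float:
    """Estimate swap data written: only the demand that exceeds host RAM.

    Simplified assumption: nothing is written to the swap files until
    host memory utilization passes 100%.
    """
    return max(0.0, vm_memory_demand_gb - host_ram_gb)


host_ram_gb = 256.0           # hypothetical host physical memory
provisioned_swap_gb = 384.0   # hypothetical sum of swap file sizes on the host

# Compare a host under 100% utilization with an overcommitted one
for demand_gb in (200.0, 288.0):
    used = swap_capacity_used_gb(host_ram_gb, demand_gb)
    print(
        f"demand {demand_gb:.0f} GB: provisioned {provisioned_swap_gb:.0f} GB, "
        f"written {used:.0f} GB"
    )
```

Under these assumptions, storage that does not write white space consumes nothing for swap files until demand exceeds host memory, whereas storage that writes zeros would consume the full provisioned amount in both cases.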

As such, the default vSphere configuration of “Virtual Machine Directory” is ideal for Nutanix environments. Valuable storage capacity is not wasted, which increases usable space, and complexity is reduced by removing the requirement for dedicated swap-file datastores, all without compromising the benefits of de-duplication and compression.